The invisible foundation that ensures modern high-performance computing systems run with speed and precision.

When you think of AI, images of futuristic robots or autonomous cars may come to mind. What probably doesn't come to mind are the little-known hardware components that quietly make such complex systems possible. Among these, PCI Express (PCIe) switches may seem like a boring subject to write about, let alone read about. But here's the twist: they are nothing short of revolutionary in enabling AI workloads. Far from being mere functional hardware, PCIe switches are the invisible foundation that accelerates data processing, eliminates bottlenecks, and ensures that modern AI systems run with blazing speed and precision.

Understanding PCIe switches: structure, capabilities, and topology

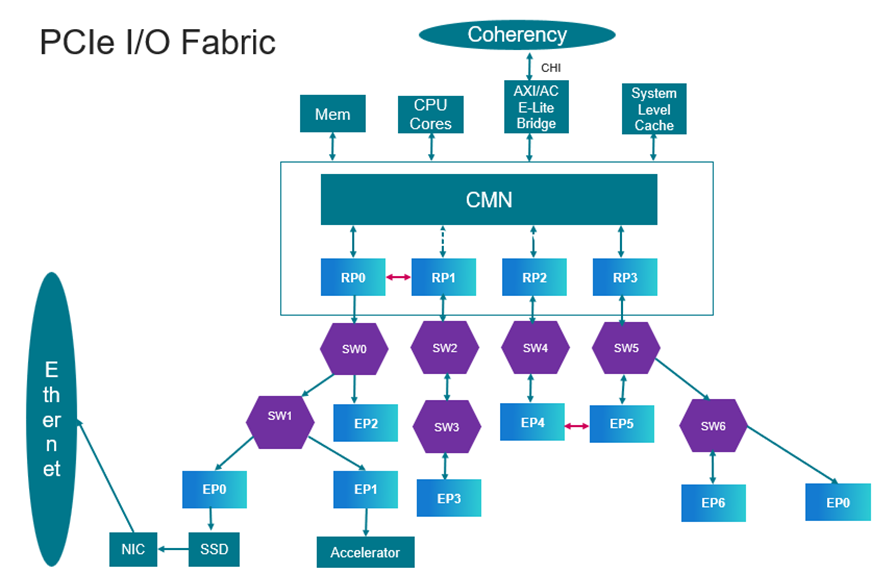

PCIe switches are intelligent multi-port devices that serve as the backbone of scalable, high-performance computing systems. Architecturally, a PCIe switch includes one upstream port connected to the root port and several downstream ports connected to endpoints such as GPUs, SSDs, or FPGAs. Internally, it contains a non-blocking crossbar switch, routing logic, a non-transparent bridge (NTB) for inter-domain communication, integrated retimer functionality, and telemetry.
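To make the NTB's role more concrete, here is a minimal Python sketch of the address translation a non-transparent bridge performs between two PCIe domains. The `NtbWindow` class and its fields (`bar_base`, `size`, `xlat_base`) are a hypothetical model for illustration, not a Cadence or vendor API: a host writes into a BAR-backed window in its own address space, and the bridge re-bases that address into the peer domain's memory.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NtbWindow:
    """Hypothetical model of one NTB memory window (not a vendor API)."""
    bar_base: int   # start of the window as seen by the local host
    size: int       # window size in bytes
    xlat_base: int  # where the window lands in the peer domain


def translate(window: NtbWindow, local_addr: int) -> Optional[int]:
    """Translate a local address into the peer domain, or None if outside the window."""
    offset = local_addr - window.bar_base
    if 0 <= offset < window.size:
        # Keep the offset, swap the base: local window -> peer memory region.
        return window.xlat_base + offset
    return None  # this window does not claim the address


if __name__ == "__main__":
    win = NtbWindow(bar_base=0x9000_0000, size=0x10_0000, xlat_base=0x2_4000_0000)
    print(hex(translate(win, 0x9000_1000)))  # 0x240001000
    print(translate(win, 0xA000_0000))       # None
```

Because each domain keeps its own address map and only the window is shared, two hosts can exchange data through the switch without either one enumerating the other's devices.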

What makes PCIe switches essential in AI and data center environments is their ability to scale connectivity and bandwidth beyond what a CPU's root complex can offer on its own. For example, modern PCIe switches enable direct peer-to-peer communication between endpoints, which is critical for multi-GPU AI workloads where accelerators must exchange large datasets without CPU intervention.
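Peer-to-peer transfers work because a switch routes memory requests by address: if the target address falls inside the memory window behind another downstream port, the request crosses the switch's internal crossbar and never reaches the CPU. The sketch below models that decision in Python. The `DownstreamPort` class and `route_memory_tlp` function are hypothetical, and the sketch assumes Access Control Services (ACS) redirection is disabled, since ACS can force peer traffic upstream for isolation.

```python
from dataclasses import dataclass
from typing import List

UPSTREAM = "upstream"


@dataclass(frozen=True)
class DownstreamPort:
    """Hypothetical model of a switch downstream port and the memory window behind it."""
    name: str
    mem_base: int   # first address routed to this port
    mem_limit: int  # last address routed to this port (inclusive, like a bridge limit register)


def route_memory_tlp(ports: List[DownstreamPort], ingress: str, address: int) -> str:
    """Pick the egress port for a memory request using address routing.

    Assumes ACS redirection is disabled, so peer traffic stays inside the switch.
    """
    for port in ports:
        if port.mem_base <= address <= port.mem_limit:
            if port.name == ingress:
                # Hardware would not send a request back out the port it arrived on.
                raise ValueError("request targets the port it arrived on")
            return port.name  # peer-to-peer: forwarded across the crossbar
    return UPSTREAM  # not behind any downstream port: send toward the root port


if __name__ == "__main__":
    ports = [
        DownstreamPort("gpu0", 0x8000_0000, 0x8FFF_FFFF),
        DownstreamPort("gpu1", 0x9000_0000, 0x9FFF_FFFF),
    ]
    print(route_memory_tlp(ports, "gpu0", 0x9000_0040))    # gpu1 (peer-to-peer)
    print(route_memory_tlp(ports, "gpu0", 0x1_0000_0000))  # upstream (e.g. host memory)
```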

PCIe topologies: where switches fit in

PCIe topologies define how devices are interconnected in a system. The most common topologies include:

- Tree topology: A single root port connects to multiple endpoints through one or more switches. This is the most common arrangement in servers and workstations.

- Multi-root topology: Several root complexes share access to a common set of endpoints through a switch that supports NTB. This is used in high-availability or multi-host systems.

PCIe switches enable these topologies by acting as a central hub that efficiently routes traffic between devices, allowing flexible system design and expansion.
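In a tree topology, bus numbers are assigned depth-first during enumeration, so each root port or switch port ends up owning a contiguous range of buses recorded as its primary, secondary, and subordinate bus numbers. That range is what lets a switch route configuration requests and completions by bus number. The Python sketch below is a simplified model of that numbering; the `Bridge` class and function names are hypothetical, and endpoints are represented only by the secondary bus of the port they attach to.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Bridge:
    """Hypothetical model of a PCI-to-PCI bridge: a root port or a switch port."""
    name: str
    children: List["Bridge"] = field(default_factory=list)  # bridges on the secondary bus
    primary: Optional[int] = None
    secondary: Optional[int] = None
    subordinate: Optional[int] = None


def enumerate_buses(bridge: Bridge, primary_bus: int, next_free: int) -> int:
    """Depth-first bus numbering; returns the next unused bus number."""
    bridge.primary = primary_bus
    bridge.secondary = next_free
    next_free += 1
    for child in bridge.children:
        next_free = enumerate_buses(child, bridge.secondary, next_free)
    # Every bus reachable through this bridge falls in [secondary, subordinate].
    bridge.subordinate = next_free - 1
    return next_free


def dump(bridge: Bridge, depth: int = 0) -> None:
    """Print the assigned bus numbers as an indented tree."""
    print(f"{'  ' * depth}{bridge.name}: primary={bridge.primary} "
          f"secondary={bridge.secondary} subordinate={bridge.subordinate}")
    for child in bridge.children:
        dump(child, depth + 1)


if __name__ == "__main__":
    # Root port on bus 0 -> switch upstream port -> two downstream ports.
    root = Bridge("root_port", [
        Bridge("switch_usp", [Bridge("switch_dsp0"), Bridge("switch_dsp1")]),
    ])
    enumerate_buses(root, primary_bus=0, next_free=1)
    dump(root)
```

With the root port on bus 0, the switch's internal bus becomes bus 2 and the two downstream ports get buses 3 and 4, so the root port's range covers buses 1 through 4. Adding another switch below a downstream port simply widens that port's range.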

PCIe switch vs. root port vs. endpoint

- Root port: The origin of the PCIe hierarchy, usually integrated into the CPU or chipset. It initiates transactions and handles the configuration and control of downstream devices. However, without a PCIe switch, a root port can connect to only a single endpoint device.

- Endpoint: A device that consumes or produces data, such as a GPU, SSD, or NIC. Endpoints are leaf nodes in the PCIe topology and rely on the root port or a switch to communicate with other devices.

- PCIe switch: Positioned between the root port and the endpoints, the switch allows multiple endpoints or downstream PCIe switches to communicate with the root port and with each other. Unlike a root port, it does not initiate transactions but forwards them. Unlike an endpoint, it does not consume or produce memory data, but routes it efficiently. A PCIe switch contains one upstream switch port, which connects to the root port or to a downstream port of another switch, and one or more downstream switch ports, which can connect to other PCIe switches and endpoint devices, allowing the fabric to be extended.

Key capabilities of PCIe switches

- Peer-to-peer transfers: Allow endpoints to communicate directly, bypassing the CPU.

- Bifurcation: Splits an x16 link into narrower links (for example, 4 × x4) to optimize bandwidth allocation.

- Bandwidth allocation: Adjusts how bandwidth is distributed according to traffic demand, congestion, and priority.

- CXL compatibility: Supports memory pooling and coherent memory sharing for AI workloads.

- Integrated retimers: Maintain signal integrity at high data rates (up to 64 GT/s in PCIe 6.0).

- Telemetry and diagnostics: Monitor the health and performance of links in real time.

- Low latency: Very low latency makes the PCIe switch better suited than Ethernet for intra-system communication. Its typical latency ranges from 1 to 5 microseconds, while VLAN-based Ethernet networking generally incurs latency between 10 and 100 microseconds. This significant difference makes the PCIe switch an attractive solution for fabric expansion in high-performance systems.
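The arithmetic behind bifurcation and the 64 GT/s figure can be sketched in a few lines of Python. This computes raw per-direction signaling bandwidth as transfer rate × lanes ÷ 8 and deliberately ignores encoding (128b/130b for PCIe 3.0 to 5.0, FLIT framing and FEC for PCIe 6.0) and protocol overhead, so the results are upper bounds rather than achievable throughput. The function names and the set of allowed widths are illustrative assumptions.

```python
from typing import List

# Per-lane transfer rates (GT/s) by PCIe generation.
TRANSFER_RATE_GTS = {3: 8, 4: 16, 5: 32, 6: 64}
# Common link widths assumed for this sketch.
ALLOWED_WIDTHS = {1, 2, 4, 8, 16}


def raw_bandwidth_gbytes(gen: int, lanes: int) -> float:
    """Raw per-direction bandwidth in GB/s, ignoring encoding and protocol overhead."""
    # One transfer moves one bit per lane, so GT/s equals Gb/s per lane; /8 converts to bytes.
    return TRANSFER_RATE_GTS[gen] * lanes / 8


def bifurcate(total_lanes: int, widths: List[int]) -> List[int]:
    """Validate a proposed split of a wide link into narrower links."""
    if any(w not in ALLOWED_WIDTHS for w in widths):
        raise ValueError(f"unsupported link width in {widths}")
    if sum(widths) != total_lanes:
        raise ValueError(f"widths {widths} do not add up to x{total_lanes}")
    return widths


if __name__ == "__main__":
    print(raw_bandwidth_gbytes(6, 16))  # 128.0 GB/s per direction for one x16 link
    for width in bifurcate(16, [4, 4, 4, 4]):
        print(f"x{width}: {raw_bandwidth_gbytes(6, width)} GB/s")  # 32.0 GB/s each
```

Splitting a PCIe 6.0 x16 link into four x4 links does not add bandwidth; it redistributes the same 128 GB/s per direction across four devices that each need only 32 GB/s.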

In short, while root ports and endpoints play fixed-function roles in a PCIe topology, the switch is the dynamic catalyst that provides scalability, flexibility, and performance optimization with minimal latency. This capability is especially critical in AI-driven systems, where efficient data movement is the key to overcoming bottlenecks.

Why are PCIe switches essential to AI?

By nature, AI systems are designed to handle immense data flows, whether for training machine learning models, running neural networks, or performing real-time analytics. To achieve this, processors such as CPUs, GPUs, and FPGAs must exchange data at incredible speeds. PCIe makes this possible by offering a high-speed, low-latency communication protocol. However, as AI applications demand tighter hardware integration and higher data throughput, standard PCIe links alone cannot keep up. This is where PCIe switches shine, delivering scalable, dynamic connectivity that quietly powers AI performance behind the scenes.

Unlike traditional switches, PCIe switches are designed to meet the unique requirements of complex systems. They manage data flow between components, balance workloads, and ensure seamless communication, allowing AI systems to operate reliably under increasingly demanding workloads. What was once a niche component is now something industries cannot build AI systems without.
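One common way a switch can share an outgoing link among several ingress ports is weighted round-robin (WRR) arbitration; the PCIe specification defines optional WRR-based port-arbitration schemes, though real switches implement them in hardware per virtual channel with implementation-specific tables. The Python sketch below is a simplified, hypothetical model of the technique, not Cadence's arbitration logic: each port receives a weight, and in every round it may send up to that many queued packets.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


def wrr_schedule(queues: Dict[str, Deque[str]], weights: Dict[str, int]) -> List[Tuple[str, str]]:
    """Drain the queues with weighted round-robin; returns (port, packet) in grant order."""
    if set(queues) != set(weights) or any(w < 1 for w in weights.values()):
        raise ValueError("every queue needs a positive weight")
    grants: List[Tuple[str, str]] = []
    while any(queues.values()):
        for port, weight in weights.items():
            q = queues[port]
            # A port may send up to `weight` packets per round; unused slots are skipped.
            for _ in range(min(weight, len(q))):
                grants.append((port, q.popleft()))
    return grants


if __name__ == "__main__":
    queues = {"gpu0": deque(["a1", "a2", "a3", "a4"]), "nic0": deque(["b1", "b2"])}
    weights = {"gpu0": 3, "nic0": 1}  # gpu0 gets three slots per round, nic0 one
    print([pkt for _, pkt in wrr_schedule(queues, weights)])
    # ['a1', 'a2', 'a3', 'b1', 'a4', 'b2']
```

Skipping the slots of idle ports keeps the link busy, while the weights still guarantee the lower-priority port a share of every round so it is never starved.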

Rewriting the story on PCIe switches

PCIe switches may not be as glamorous as brilliant new AI algorithms or revolutionary robots, but they do more of the heavy lifting than you might think. They have quietly become the hidden engines behind AI transformations in areas such as autonomous vehicles, healthcare diagnostics, and edge computing. The next time you hear "PCIe switches," don't think boring: think of them as quietly fueling the greatest advances in AI, which is anything but ordinary.

Cadence offers a robust PCIe switch with upstream and downstream ports, streamlining even the most complex physical-layer and protocol-layer requirements. Our switch ports handle the intricacies of the PHY, data link, and transaction layers, freeing designers to focus their innovation on the application layer of their SoC fabric. This includes integrating endpoints, additional switches, and peer-to-peer (P2P) interconnects. Whether you are architecting a dense interconnect topology or evaluating PCIe connectivity beyond your SoC boundary, Cadence simplifies the heavy lifting, enabling agility, efficiency, and flexibility in advanced design.

Vanessa Do


Vanessa Do is a senior product marketing director at Cadence.