AI-powered virtual cell models represent one of the most promising and ambitious frontiers in biology, poised to guide breakthrough experimental studies and discoveries in human health and disease. However, the field of biological AI has been slowed by a major technical and systemic bottleneck: the lack of reliable, reproducible benchmarks for evaluating the performance of biological models.

Without unified evaluation methods, the same model can produce different performance scores in different laboratories, not because of scientific factors but because of differences in implementation. This forces researchers to spend three weeks building custom evaluation pipelines for tasks that should take three hours with the right infrastructure. The result? Valuable research time diverted away from discovery.

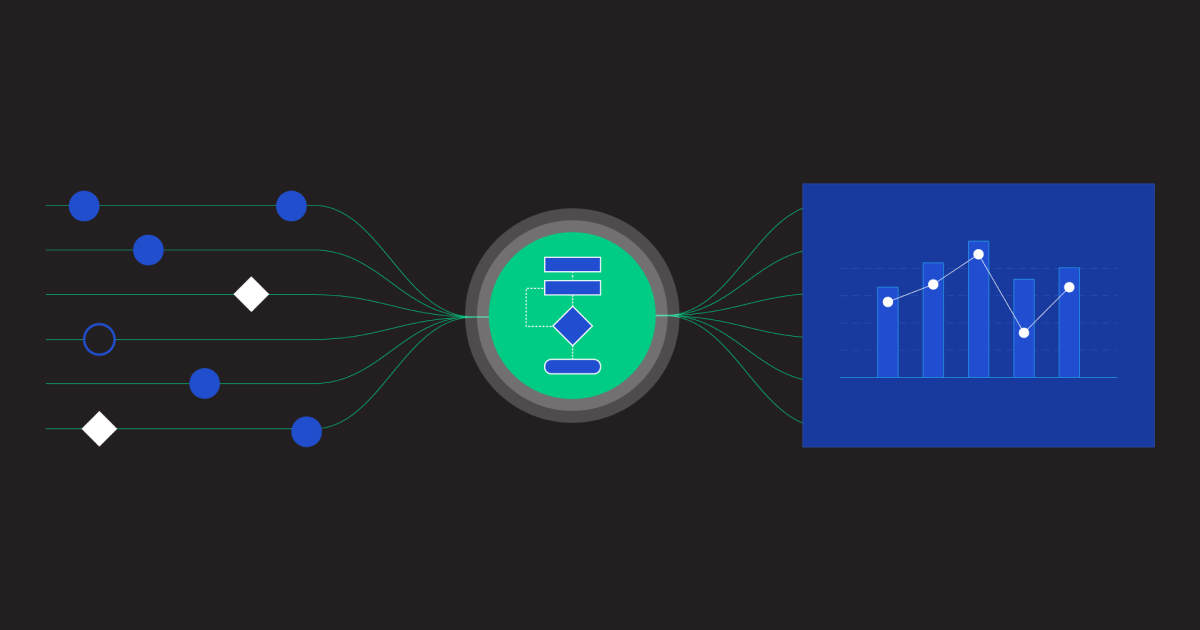

In collaboration with industry partners and a community working group initially focused on single-cell transcriptomics, the Chan Zuckerberg Initiative (CZI) has released the first suite of tools for robust, broad, task-based benchmarking to drive the development of virtual cell models. This standardized toolkit gives the emerging field of virtual cell modeling an easy way to assess both biological relevance and technical performance.

The impact is immediate: model developers can spend less time figuring out how to evaluate their models and more time improving them to solve real biological problems. Meanwhile, biologists can confidently evaluate candidate models before investing significant time and effort in deploying them.

Built for the community, with the community

The new CZI benchmarking suite addresses a recognized community need for more usable, transparent, and biologically relevant resources. After a recent workshop that convened machine learning and computational biology experts from 42 leading science and engineering institutions, including CZI, Stanford University, Harvard Medical School, Genentech, Johnson & Johnson, and NVIDIA, participants concluded in a preprint that benchmarking of AI models in biology has been hampered by reproducibility challenges, biases, and a fragmented ecosystem of publicly available resources.

The group highlighted several areas where current benchmarking efforts fall short. Model developers often create bespoke benchmarks for individual publications, using custom, one-off approaches that showcase their own models' strengths. This can lead to cherry-picked results that look good in isolation but are difficult to cross-check or reproduce in practice, slowing progress because models cannot truly be compared or trusted.

The field has also struggled with overfitting to static benchmarks. When a community aligns too closely around a small, fixed set of tasks and metrics, developers may optimize for benchmark success rather than biological relevance. The resulting models can perform well on curated tests yet fail to generalize to new datasets or research questions. In these cases, benchmarking can create the illusion of progress while stalling real impact.

Aware of these shortcomings, the CZI team worked with community working groups and industry partners to design and build a standardized benchmarking suite. The result is a living, extensible product where individual researchers, research teams, and industry partners can propose new tasks, contribute evaluation data, and share models.

Designed to build better models, faster

CZI's benchmarking suite is freely available on its Virtual Cells Platform and is designed for broad adoption across levels of expertise. Users can explore and apply benchmarking tools suited to their technical background. For example, developers can choose between two programmatic tools: a command-line tool that lets users easily reproduce the benchmarking results displayed on the platform, or an open-source Python package, cz-benchmarks, co-developed with NVIDIA, for running evaluations alongside training or inference code. With just a few lines of code, benchmarking can be run at any stage of development, including on intermediate checkpoints. The modular package integrates with experiment-tracking tools such as TensorBoard and MLflow.

Users without a computational background can use the interactive, no-code web interface to explore and compare the performance of one model against others. Whether optimizing their own models or evaluating existing ones, users can sort and filter by task, dataset, or metric to find what matters most for their research.

The initial release of CZI's benchmarking suite includes six tasks widely used by the biology community for single-cell analysis, contributed by CZI and partners including NVIDIA, the Allen Institute, and a cell community working group. Among them are sequential ordering prediction and cross-species label transfer. Unlike benchmarking efforts that often rely on a single metric, each task in the CZI toolkit is paired with multiple metrics for a more thorough view of performance.
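To make the idea of scoring one task with several metrics concrete, here is a minimal sketch of a label-transfer evaluation on precomputed cell embeddings. It is not the cz-benchmarks API: the function names, the k-nearest-neighbor classifier, the choice of accuracy plus macro-F1, and the toy two-dimensional embeddings are all hypothetical, chosen only for illustration.

```python
from collections import Counter
from math import dist


def knn_transfer_labels(ref_emb, ref_labels, query_emb, k=3):
    """Hypothetical helper: give each query cell the majority label of its
    k nearest reference cells (Euclidean distance in embedding space)."""
    predicted = []
    for q in query_emb:
        nearest = sorted(range(len(ref_emb)), key=lambda i: dist(q, ref_emb[i]))[:k]
        votes = Counter(ref_labels[i] for i in nearest)
        predicted.append(votes.most_common(1)[0][0])
    return predicted


def accuracy(y_true, y_pred):
    """Fraction of query cells whose transferred label is correct."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1, so rare cell types count as much as common ones."""
    scores = []
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def evaluate_label_transfer(ref_emb, ref_labels, query_emb, query_labels, k=3):
    """Score a single task with several metrics instead of one number."""
    pred = knn_transfer_labels(ref_emb, ref_labels, query_emb, k)
    return {
        "accuracy": accuracy(query_labels, pred),
        "macro_f1": macro_f1(query_labels, pred),
    }


# Toy data (hypothetical): reference cells from one species, query cells from another.
ref = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (1.0, 1.0), (1.1, 1.0), (1.0, 1.1)]
ref_y = ["T cell"] * 3 + ["B cell"] * 3
query = [(0.05, 0.05), (1.05, 1.05), (0.9, 0.9), (0.2, 0.1)]
query_y = ["T cell", "B cell", "B cell", "B cell"]

scores = evaluate_label_transfer(ref, ref_y, query, query_y)
print(scores)  # accuracy 0.75, macro_f1 about 0.733
```

Here the last query cell is mislabeled, which costs the two classes differently: B cell F1 is 0.8 while T cell F1 is about 0.667. Accuracy alone would not reveal that split. The same function could, in principle, be called on embeddings from each intermediate checkpoint to track how both metrics move during training.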

"As an AI researcher, I rely on benchmarks to understand model performance and guide improvements," says Kasia Kedzierska, an AI researcher at the Allen Institute. "cz-benchmarks serves as a valuable community resource, with unified data, models, and tasks, that is dynamic and open to contributions. Our team at the Allen Institute was happy to contribute a dataset on immune variation and flu vaccine response, as well as the associated benchmark task."

"One of the biggest challenges in benchmarking today is that, beyond combing through the literature, researchers often have to implement and test models themselves to see how they behave in a specific study context," says Rafaela Merika, a graduate student at the Earlham Institute. "This makes systematic, community-driven benchmarking especially critical, because shared and unbiased evaluations can considerably reduce this workload, letting us spend more time advancing science rather than constantly chasing and rerunning new models."

Check out a demo of the new CZI benchmarking command-line tool, designed to help you compare pretrained ML models on single-cell datasets, evaluate performance on clustering, integration, and labeling tasks, and even bring your own datasets with precomputed embeddings. With this tool, you can also easily reproduce published benchmark results and accelerate your research.

Looking ahead

CZI is leading the development and maintenance of these tools as a living, community-driven resource that will evolve alongside the field, incorporating new data, refining metrics, and adapting to emerging biological questions. Backed by standardized, robust benchmarking, AI can live up to its potential to accelerate biological research, producing robust models that address some of the most complex and pressing challenges in biology and medicine today.

In the coming months, CZI will expand the suite with community-defined benchmarking assets, including held-out evaluation datasets, and will develop tasks and metrics for other biological domains, including imaging and genetic variant effect prediction.

Virtual cell benchmarking can and must grow into shared, evolving infrastructure that is transparent, trustworthy, and representative of real scientific needs. With an open benchmarking ecosystem, rigorous model evaluation will become not only easier but an expected part of building useful models in biology.

Ready to get started? Evaluate models, explore community benchmarks, or contribute to this ecosystem by visiting our platform or contacting us at [email protected].