A study by UC Riverside researchers offers a practical solution to one of artificial intelligence’s toughest challenges by enabling AI systems to reason more like humans, without requiring new training data beyond testing questions.

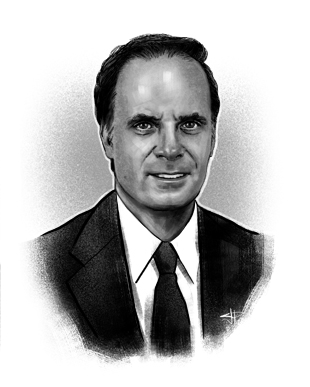

In a preprint paper entitled “Test-Time Matching: Unlocking Compositional Reasoning in Multimodal Models”, assistant professor Yinglun Zhu and students introduce a new method called Test-Time Matching, or TTM. This approach significantly improves how AI systems interpret relationships between text and images, especially when presented with unknown combinations.

“Compositional reasoning is about generalizing the way humans do and understanding new combinations based on known parts,” said Zhu, who led the study and is a member of the Bourns College of Engineering’s department of electrical and computer engineering. “This is essential for developing AI that can make sense of the world, not just memorize patterns.”

Today’s leading AI models perform well in many tasks, but they can falter when asked to align visual scenes with language under a compositional constraint, such as when familiar objects and relationships are rearranged and described in new ways.

Researchers use specialized tests to assess whether AI models can integrate concepts like people do. Yet the models often perform no better than chance, suggesting they struggle to capture the nuanced relationships between words and images.

Zhu’s team found that existing assessments could unfairly penalize models.

Widely used evaluation metrics now rely on isolated pairwise comparisons, imposing additional constraints that can obscure the best overall match between images and captions, Zhu said.

To address this issue, the team created a new evaluation metric that identifies the best overall match in a group of image-caption pairs. This measure improved scores and revealed new model capabilities.
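The difference between the two scoring styles can be illustrated with a small sketch. This is not the paper's implementation: the function names, the toy similarity values, and the convention that `sim[i][j]` is the model's score for image `i` paired with caption `j` (with the correct pairing on the diagonal) are all assumptions made for illustration.

```python
from itertools import permutations

def pairwise_correct(sim):
    """Strict pairwise check: every image must score its own caption above
    every other caption, and every caption its own image above every other image."""
    k = len(sim)
    for i in range(k):
        for j in range(k):
            if i != j and (sim[i][i] <= sim[i][j] or sim[i][i] <= sim[j][i]):
                return False
    return True

def best_matching(sim):
    """Return the one-to-one image-to-caption assignment with the highest total score.
    Brute force over permutations; fine for the small groups used here."""
    k = len(sim)
    return max(permutations(range(k)),
               key=lambda p: sum(sim[i][p[i]] for i in range(k)))

def matching_correct(sim):
    """Group-level check: the best overall assignment is the correct (diagonal) one."""
    return best_matching(sim) == tuple(range(len(sim)))

# Toy group of two images and two captions (values are made up).
sim = [[0.60, 0.50],
       [0.70, 0.65]]

print(pairwise_correct(sim))   # one isolated comparison fails
print(matching_correct(sim))   # yet the best overall match is the correct one
```

In this toy group, caption 0 scores higher with image 1 than with its own image, so the pairwise check fails, while the assignment with the highest total score is still the correct one.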

Building on this insight, the researchers then developed TTM, a technique that allows AI systems to improve over time without any external supervision.

The method works by asking the AI model to predict matches between images and captions and then keeping only its most reliable predictions. The model adjusts itself using these predictions, and the cycle repeats to refine its performance. This self-improvement process mimics the way people use context to reason more effectively.
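The predict, select, adjust, repeat loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' code: the reliability measure (the score gap between the best and second-best matching), the threshold schedule, and the `score_fn` and `update_fn` callbacks are all hypothetical stand-ins for the real model and its fine-tuning step.

```python
from itertools import permutations

def match_with_margin(sim):
    """Return the best image-to-caption matching and its score margin over the
    runner-up matching. sim[i][j] scores image i against caption j (k >= 2)."""
    k = len(sim)
    scored = sorted(
        ((sum(sim[i][p[i]] for i in range(k)), p) for p in permutations(range(k))),
        reverse=True,
    )
    (best_score, best), (second_score, _) = scored[0], scored[1]
    return best, best_score - second_score

def select_pseudo_labels(sims, threshold):
    """Keep only the groups whose predicted matching clears the margin threshold."""
    selected = []
    for idx, sim in enumerate(sims):
        perm, margin = match_with_margin(sim)
        if margin >= threshold:
            selected.append((idx, perm))
    return selected

def self_improve(score_fn, update_fn, groups, thresholds):
    """Repeat: predict matches, keep the reliable ones, adjust the model on them.
    score_fn(group) -> similarity matrix; update_fn(pseudo_labels) is a
    hypothetical hook that would fine-tune the model. Returns how many
    groups were selected in each round."""
    history = []
    for threshold in thresholds:
        sims = [score_fn(g) for g in groups]
        pseudo = select_pseudo_labels(sims, threshold)
        update_fn(pseudo)
        history.append(len(pseudo))
    return history

# Toy run: the "model" is the identity function and the update is a no-op log.
toy_groups = [
    [[0.9, 0.1], [0.2, 0.8]],    # confident group
    [[0.5, 0.49], [0.5, 0.5]],   # ambiguous group
]
log = []
rounds = self_improve(lambda g: g, log.append, toy_groups, thresholds=[0.5, 0.0])
print(rounds)
```

In a real setting, `update_fn` would change the model so that later rounds score the groups differently; here the scores stay fixed, so the only thing that changes between rounds is the assumed threshold.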

The researchers tested their method on SigLIP-B16, a relatively small vision-language model designed to understand and connect visual and textual information. With TTM, SigLIP-B16 significantly improved its performance on compositional reasoning tests, matching or exceeding previous peak results.

In testing, TTM improved the performance of SigLIP-B16 on a benchmark dataset known as MMVP-VLM to 89.4%, outperforming GPT-4.1.

“Even smaller models have the capacity for strong reasoning,” Zhu said. “We just need to unlock it with better assessment and smarter testing methods.”

The study suggests that test-time adaptation strategies such as TTM could become essential as AI expands into real-world contexts such as robotics, autonomous vehicles and healthcare, areas in which systems must adapt quickly to new situations.

Zhu’s findings challenge the prevailing assumption that bigger models are always better. Rather, they call for a rethinking of how AI systems are evaluated and deployed.

“Sometimes the problem isn’t the model. It’s how we use it,” he said.

The full paper, co-authored with Jiancheng Zhang and Fuzhi Tang of UCR, is available on arXiv.

(Concept header image/Getty Images)