I think every student and teacher knows that solving a problem becomes easier when you can visualize it. The steps, the logic, the moments of “showing your work” can feel like windows into our minds and a path to the solution.

This is why one of AI’s best-known techniques, chain of thought (CoT), feels so persuasive. The AI works through a problem in tidy compartments, moving from the first step to the last like a careful student. And in many cases, CoT prompting can improve a model’s performance.

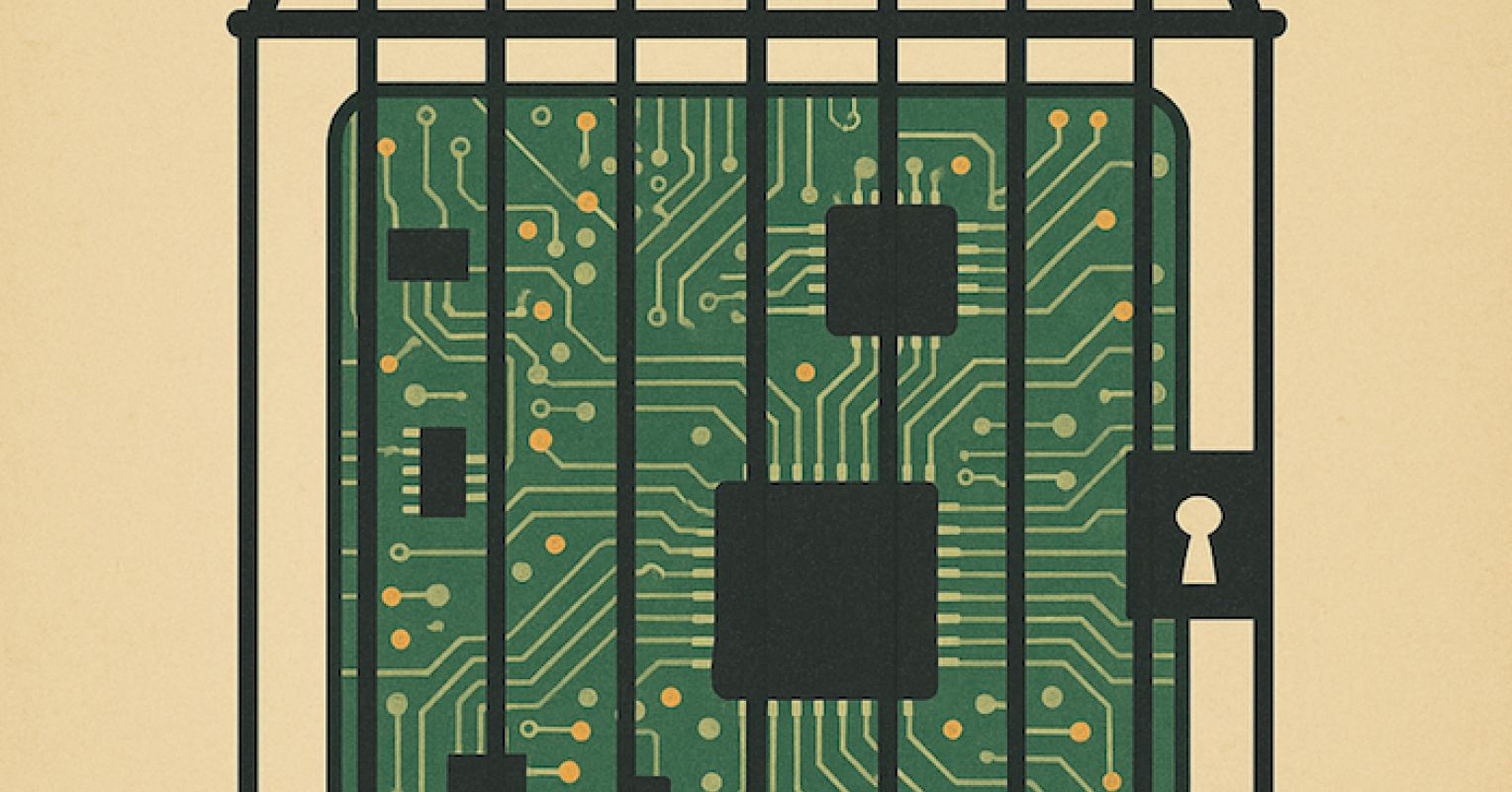

But a recent study (a preprint, not yet peer-reviewed) suggests that these windows may not be windows at all. They may be, as the authors put it, a mirage. And here again we see a kind of functional rigidity, or structural containment, that keeps large language models operating inside their cage of utility. Let’s take a closer look and try to unravel the illusion.

Building a model from scratch

Instead of using a commercial system like Grok or GPT-5, the researchers built their own model from scratch. The goal was not to chase performance records but to gain clarity. By training their system only on carefully constructed synthetic problems, they could strip out the noise of unknown data and hidden overlaps. There was no accidental leakage from pretraining and no chance that the model had already “seen” the test in disguise. It was a clean environment for probing the limits of machine reasoning.

With this control in place, they could ask a deceptively simple but critical question: what happens when a model that is good at step-by-step answers is pushed beyond the patterns it was trained on?

The cage becomes visible

The study examined three types of “shift” to see how the model reacted.

- Change the task: familiar skills appeared in a new combination.

- Change the length: problems were shorter or longer than those seen in training.

- Change the format: the same question was posed in a different form.
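To make the three shifts concrete, here is a deliberately crude toy in Python. It is not the authors’ model or data: the operations (`add1`, `double`, `negate`), the comma-separated prompt format, and the `PatternReplayer` class are all hypothetical. The toy “reasoner” never learns what the operations mean. It replays whichever training chain looks most similar to the prompt, so it writes out tidy steps whether or not they fit the question.

```python
from difflib import SequenceMatcher

# Hypothetical toy operations; nothing here comes from the study itself.
OPS = {
    "add1": lambda x: x + 1,
    "double": lambda x: x * 2,
    "negate": lambda x: -x,
}


def run_chain(ops, x):
    """Execute ops one at a time; return the written-out steps and the final answer."""
    steps = []
    for op in ops:
        x = OPS[op](x)
        steps.append(f"{op} -> {x}")
    return steps, x


class PatternReplayer:
    """Toy 'reasoner' that replays the training chain most similar to the prompt."""

    def __init__(self, training_prompts):
        # Training prompts all use one fixed format: op names separated by ", ".
        self.chains = [tuple(p.split(", ")) for p in training_prompts]

    def answer(self, prompt, x):
        tokens = tuple(prompt.split(", "))
        # Pick the seen chain with the highest surface similarity to the prompt
        # (ties go to the earliest training prompt), then execute that chain.
        best = max(self.chains, key=lambda c: SequenceMatcher(None, c, tokens).ratio())
        return run_chain(best, x)


model = PatternReplayer(["add1, double", "double, add1", "negate, add1"])

# Each case: (prompt shown to the model, the operations the prompt actually asks for).
cases = {
    "in distribution": ("add1, double", ["add1", "double"]),
    "task shift": ("double, negate", ["double", "negate"]),
    "length shift": ("add1, double, add1", ["add1", "double", "add1"]),
    "format shift": ("double then add1", ["double", "add1"]),
}

for name, (prompt, true_ops) in cases.items():
    steps, guess = model.answer(prompt, 3)
    _, truth = run_chain(true_ops, 3)
    print(f"{name}: steps={steps} answer={guess} correct={truth}")
```

Run as is, the in-distribution prompt gets the right answer (8), while the task, length, and format shifts all get the same familiar chain back and answer 8 instead of -6, 9, and 7. A real language model is far more flexible than a similarity lookup, so treat this only as a picture of the failure pattern the study describes, not as a model of how CoT works inside a transformer.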

In every case, performance collapsed. The model could handle problems that matched its training distribution, but even modest changes, such as a few extra steps or a reworded prompt, caused it to fail. In the authors’ own words:

“Our results reveal that CoT reasoning is a brittle mirage that vanishes when it is pushed beyond training distributions.”

I would call it something else: not a mirage, but reasoning in captivity. What looks like free thought is really a techno-creature roaming the familiar terrain of its training, unable to cross the walls that hold it in.

The illusion of transfer

Put simply, humans can take a principle learned in one situation and adapt it to another. We move from the familiar to the unfamiliar by carrying meaning across contexts.

The models in this study, like every large language model, do the opposite. They thrive in a kind of perfect statistical familiarity and falter outside this “technological comfort zone.” Their “reasoning” is no exception to the training distribution; it stays caged within it.

This is what I have called anti-intelligence. It is not the absence of competence but the inversion of adaptability: the appearance of general reasoning, but only within a narrow and often repetitive statistical world.

Thinking beyond the laboratory

The research was both simple and revealing, and what it found should matter to anyone who relies on AI for decisions. Whether you are looking at a small custom-built model or the sprawling architecture of today’s GPT-5, the underlying mechanism is the same: a statistical process that predicts the next step based on what it has seen before. Larger models make the cage bigger and more comfortable. They do not remove the bars. In fact, gains in fluency often make the cage harder to notice.
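The idea of “predicting the next step based on what it has seen before” can be sketched with a count-based bigram model. This is a huge simplification, assumed purely for illustration: real LLMs use neural networks over long contexts rather than a lookup of the single previous step, and the step names and the `BigramNextStep` class below are hypothetical. What it shows is narrow: when a context never appeared in training, the model can only fall back on what was most common there.

```python
from collections import Counter, defaultdict


class BigramNextStep:
    """Count-based next-step predictor: a crude stand-in, far simpler than a transformer."""

    def __init__(self):
        self.follow = defaultdict(Counter)  # previous step -> counts of the step that followed it
        self.overall = Counter()            # counts of every step seen in training

    def train(self, sequences):
        for seq in sequences:
            self.overall.update(seq)
            for prev, nxt in zip(seq, seq[1:]):
                self.follow[prev][nxt] += 1

    def predict(self, prev):
        # Use what followed `prev` in training; if `prev` was never seen with a
        # successor, fall back to the most frequent step overall.
        counts = self.follow.get(prev) or self.overall
        return counts.most_common(1)[0][0]


predictor = BigramNextStep()
predictor.train([
    ["read", "plan", "solve", "check"],
    ["read", "plan", "solve", "answer"],
    ["read", "solve", "check"],
    ["read", "check"],
])
print(predictor.predict("plan"))    # solve
print(predictor.predict("verify"))  # read
```

Here `"plan"` is followed by `"solve"` because that is what came after it in training, while the unseen step `"verify"` gets `"read"`, simply the most frequent step overall. More training sequences widen the set of covered contexts, but the behavior outside them does not change.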

The takeaway

Chain of thought does not free reasoning; it simply rearranges the space inside the cage. The steps may be clearer and the compartments better lit. But the hard reality is that the limits remain.

And here is the key point. This does not make AI worse than us, or better. It makes it different. And these differences are not defects to be corrected but the signature of a fundamentally distinct kind of intelligence. The more we try to make AI behave like us, the more these differences will stand out, sometimes in surprising or even unsettling ways.

Recognizing this is not an act of resignation but the beginning of understanding. I think it is essential to see that we are not looking at defective copies of human minds. We are looking at something else entirely, perhaps even a new “species of reasoning” that is still confined, but with its own shape and its own limits.

The challenge is to learn what it can be, without forcing it to become what it is not.