The semiconductor industry is increasingly turning to artificial intelligence to manage the growing complexity of test analytics, hoping algorithms can tame the flood of production data. The need to extract actionable insight from that torrent is pressing. Artificial intelligence and machine learning models (referred to collectively here as AI) promise to find correlations buried in multidimensional datasets, predict failures before they occur, and optimize test programs on the fly.

Just a few years into the AI deployment wave, however, the results tell a more nuanced story. AI is proving valuable in well-bounded applications such as predictive monitoring, anomaly detection, and adaptive limit setting, but its broader role in production test remains uncertain. The physical realities of semiconductor manufacturing, such as electrical noise, socket degradation, process drift, and incomplete telemetry, still confound purely data-driven approaches. Models trained in idealized environments often stumble when confronted with the variability and imperfection of real hardware.

AI may be powerful at finding patterns, but it cannot interpret their meaning without human context.

“From a data scientist’s point of view, we can’t let machines handle all the data,” said Jin Yu, senior test solutions architect at Teradyne. “We start with a small amount of data, build understanding, then scale. Human domain knowledge is still very important to get the most out of the data.”

As the industry moves from hype to implementation, the question is no longer whether AI can revolutionize test analytics, but where it genuinely adds value, where it fails, and where human expertise remains irreplaceable in the process.

Where AI excels

AI’s capabilities manifest most clearly in production environments where its ability to process vast datasets and detect subtle patterns delivers measurable results. Predictive monitoring, anomaly detection, and adaptive test optimization represent the clearest success stories where machine learning has moved beyond proof-of-concept into daily use.

“AI can monitor tools and make predictions about when a tool is beginning to drift within the fleet,” said Marc Jacobs, senior director of solutions architecture at PDF Solutions. “When the drift gets close to impacting yield, you can take that tool down and readjust before yield loss occurs.”

Predictive models like these identify early signs of process or equipment drift, allowing fabs to intervene before performance deteriorates. By correlating data across multiple tools and test cells, engineers can isolate systemic shifts long before they appear in yield metrics. The value is tangible — preventing a single wafer loss often justifies weeks of monitoring investment.
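One common way to catch slow drift of this kind is an exponentially weighted moving average (EWMA) control chart, which smooths tool readings so that small, sustained shifts stand out from sample-to-sample noise. The sketch below is illustrative only. The baseline target and sigma, the smoothing weight `lam`, and the limit width `L` are assumed values rather than parameters from any vendor's system, and the function name `ewma_alarms` is hypothetical.

```python
import math
from typing import List, Sequence


def ewma_alarms(values: Sequence[float], target: float, sigma: float,
                lam: float = 0.2, L: float = 3.0) -> List[int]:
    """Return the indices at which the EWMA statistic exceeds its control limits.

    target and sigma describe the in-control baseline (assumed known, e.g. from
    tool qualification data); lam is the smoothing weight, L the limit width.
    """
    z = target
    alarms: List[int] = []
    for i, x in enumerate(values, start=1):
        z = lam * x + (1 - lam) * z
        # Exact EWMA standard deviation after i samples (widens toward its asymptote).
        sd = sigma * math.sqrt(lam / (2 - lam) * (1 - (1 - lam) ** (2 * i)))
        if abs(z - target) > L * sd:
            alarms.append(i - 1)  # 0-based index of the sample that triggered
    return alarms


# Hypothetical data: ten in-control readings, then a slow ramp of +0.05 per sample.
readings = [10.0] * 10 + [10.0 + 0.05 * k for k in range(1, 21)]
print(ewma_alarms(readings, target=10.0, sigma=0.1)[0])  # → 14
```

With these assumed numbers the chart alarms five samples into the ramp, when the raw reading has moved only 2.5 baseline sigmas. A yield-aware deployment would set the limits relative to the drift level that actually begins to affect yield.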

AI also improves test efficiency through adaptive optimization. By dynamically adjusting limits and prioritizing high-value test patterns, machine learning helps identify redundant tests and reduce total test time without compromising outgoing quality.

“AI is already making a big impact in adaptive test optimization and anomaly detection,” said Eduardo Estima de Castro, senior manager of R&D engineering at Synopsys. “Machine learning helps prioritize high-value test patterns, cut test time, and identify systemic yield issues. It also enables real-time adjustments to test limits, improving outgoing quality. These capabilities bring significant efficiency gains in high-volume production.”
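Real-time limit adjustment is often implemented as dynamic part average testing (PAT), in which outlier limits are recomputed from the distribution of the lot being tested rather than fixed at datasheet values. The sketch below assumes limits of median ± k robust sigmas, with robust sigma estimated as IQR/1.349 and k = 6. Those choices and the function names are illustrative, not taken from any tool mentioned here.

```python
import statistics
from typing import List, Sequence, Tuple


def dynamic_pat_limits(values: Sequence[float], k: float = 6.0) -> Tuple[float, float]:
    """Compute lower/upper outlier limits from the lot's own robust statistics."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    robust_sigma = (q3 - q1) / 1.349  # the IQR of a normal distribution is ~1.349 sigma
    center = statistics.median(values)
    return center - k * robust_sigma, center + k * robust_sigma


def pat_outliers(values: Sequence[float], k: float = 6.0) -> List[int]:
    """Return indices of parts that fall outside the dynamic limits."""
    lo, hi = dynamic_pat_limits(values, k)
    return [i for i, v in enumerate(values) if v < lo or v > hi]


# Hypothetical lot: one part sits far from its neighbors.
lot = [1.00, 1.01, 0.99, 1.02, 0.98, 1.00, 1.01, 0.99, 1.00, 1.50]
print(pat_outliers(lot))  # → [9]
```

Because the median and interquartile range are insensitive to a single extreme value, the 1.50 reading does not widen the limits that catch it.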

At the hardware interface, AI is beginning to assist with socket health monitoring. Variations in contact resistance and signal integrity often appear as subtle electrical noise long before a socket fails completely. By training models to recognize those signatures, test engineers can schedule maintenance proactively and prevent data corruption that would otherwise distort downstream analytics.
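A simple version of that idea compares the short-term noise on a repeated reference measurement against the noise recorded when the socket was new. The sketch below assumes such a reference reading exists for each insertion (for example, a contact check or golden-device measurement). The window length, the 2x ratio threshold, and the function name are hypothetical, and a fixed ratio stands in here for the trained models the text describes.

```python
import statistics
from typing import List, Sequence


def noise_alerts(readings: Sequence[float], baseline_sd: float,
                 window: int = 20, ratio_limit: float = 2.0) -> List[int]:
    """Return indices where the rolling sample std dev exceeds ratio_limit x baseline_sd."""
    alerts: List[int] = []
    for end in range(window, len(readings) + 1):
        sd = statistics.stdev(readings[end - window:end])
        if sd > ratio_limit * baseline_sd:
            alerts.append(end - 1)  # index of the newest reading in the window
    return alerts


# Hypothetical history: 40 quiet insertions (+/-0.01), then 20 noisy ones (+/-0.05).
quiet = [1.0 + (0.01 if i % 2 == 0 else -0.01) for i in range(40)]
noisy = [1.0 + (0.05 if i % 2 == 0 else -0.05) for i in range(20)]
alerts = noise_alerts(quiet + noisy, baseline_sd=0.01)
print(alerts[-1])  # → 59
```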

“We assume the test instrument is perfect,” said Teradyne’s Yu. “But in actuality, it’s not. We must take into account the calibration data that might indicate the instrument needs calibration or proper cleaning. Combining the datasets from the instrument side and the device side can benefit both.”
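Combining the two datasets can start with something as simple as tagging each device result with the calibration status of the instrument that produced it. The record layouts, field names, and the rule that an overdue or missing calibration makes a result suspect are hypothetical, chosen only to illustrate the join.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class CalRecord:
    instrument_id: str
    last_cal: datetime
    interval: timedelta


@dataclass(frozen=True)
class Measurement:
    device_id: str
    instrument_id: str
    timestamp: datetime
    value: float


def suspect_devices(measurements: Sequence[Measurement],
                    cal_records: Sequence[CalRecord]) -> List[str]:
    """Return device IDs measured on an instrument with no record or past its due date."""
    cal: Dict[str, CalRecord] = {r.instrument_id: r for r in cal_records}
    suspect: List[str] = []
    for m in measurements:
        rec = cal.get(m.instrument_id)
        if rec is None or m.timestamp > rec.last_cal + rec.interval:
            suspect.append(m.device_id)
    return suspect


cal = [CalRecord("INST-A", datetime(2024, 1, 1), timedelta(days=30))]
meas = [
    Measurement("D1", "INST-A", datetime(2024, 1, 15), 0.82),  # within interval
    Measurement("D2", "INST-A", datetime(2024, 2, 15), 0.79),  # calibration overdue
    Measurement("D3", "INST-B", datetime(2024, 1, 15), 0.81),  # no calibration record
]
print(suspect_devices(meas, cal))  # → ['D2', 'D3']
```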

Finally, AI has shown particular strength in yield learning. By correlating data across wafer sort, burn-in, and system-level test, machine learning can reveal latent relationships that escape traditional statistical process control. The pattern recognition works because the datasets are large, the measurements are relatively consistent, and the correlations, once found, can be validated against known physical mechanisms.

All of these success stories share common characteristics. They operate on large, relatively clean datasets. They augment rather than replace human decision-making. And they solve problems where pattern recognition at scale provides clear advantages over manual analysis. But they also highlight where AI’s capabilities end and the harder problems begin.

The foundation: Infrastructure and data quality

The semiconductor industry’s enthusiasm for AI has, in many cases, outpaced attention to the unglamorous infrastructure work that makes AI successful. Models trained on corrupted data produce unreliable results, no matter how sophisticated the algorithms. That reality is forcing a renewed focus on test socket characterization, probe maintenance, and electrical noise reduction, the physical foundation that determines whether AI can function at all.

When electrical noise from poorly characterized test sockets corrupts the signal at the point of contact, no amount of downstream AI processing can recover the original data. The corruption happens at the physical layer, before digitization, before any software touches it. This is not a problem AI can solve. It is a problem AI inherits.

“You can’t see interference corrupting data on the die,” said Jack Lewis, CTO at Modus Test. “It’s like trying to listen to outer space while somebody’s blaring their radio next to you. The signals you care about are buried in the noise.”

This challenge extends beyond initial characterization. Test hardware degrades over time. Probe tips accumulate foreign material or debris, contact resistance increases, and socket performance drifts as mechanical wear accumulates.

“What usually happens is the interconnects are characterized when the socket, load board, or probe card is brand new,” said Glenn Cunningham, director of test and characterization at Modus Test. “They don’t characterize it again through the life of that product, so over time the socket performance drifts and starts corrupting data.”

The result is systematic drift that models interpret as process variation, leading to false alarms or missed detections as the physical reality diverges from the baseline the model was trained on. That infrastructure variability creates a fundamental challenge for AI deployment. Before sophisticated analytics can run, the data must be verified. Someone needs to confirm that measurements reflect device performance, not test infrastructure artifacts.

To mitigate those issues, companies are beginning to add continuous sensing directly into the test hardware.

“Advantest created a continuously running monitor for contact resistance on power planes,” said Brent Bullock, test technology director at Advantest. “It’s an extra ADC measuring the voltage drop across the contact, so you can set alarms and react before it becomes a yield hit or a burn event.”

By treating electrical contact resistance as a live signal rather than a static characteristic, test teams can detect degradation early and feed cleaner data to their analytics engines.
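The arithmetic behind such a monitor is Ohm's law: dividing the measured voltage drop across the contact by the current through it gives the contact resistance, which can then be compared against alarm levels. The sketch below is not Advantest's implementation. The warning and alarm thresholds are hypothetical, and working in mV and A keeps the result in milliohms.

```python
def contact_resistance_mohm(v_drop_mv: float, current_a: float) -> float:
    """Contact resistance from an ADC voltage-drop reading (mV / A = milliohms)."""
    if current_a <= 0:
        raise ValueError("current must be positive to infer resistance")
    return v_drop_mv / current_a


def contact_status(r_mohm: float, warn_mohm: float = 80.0, alarm_mohm: float = 120.0) -> str:
    """Classify a reading against hypothetical warning and alarm levels."""
    if r_mohm >= alarm_mohm:
        return "alarm"
    if r_mohm >= warn_mohm:
        return "warn"
    return "ok"


# Hypothetical per-insertion readings: (voltage drop in mV, current in A).
for v_mv, i_a in [(50.0, 1.0), (100.0, 1.0), (40.0, 0.25)]:
    r = contact_resistance_mohm(v_mv, i_a)
    print(f"{r:.0f} mOhm -> {contact_status(r)}")
```

Trending this value on every insertion, rather than checking it once at characterization, is what turns contact resistance into a live signal.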

But the problem runs deeper than periodic verification. The sheer combinatorial complexity of contact variability across thousands of pins makes it nearly impossible for AI models to separate signal from noise without explicit characterization of the interconnect layer.

“When the interconnect is unstable and random across thousands of contacts, it hurts everything you’re trying to accomplish with AI,” said Modus Test’s Lewis. “It injects noise into the system and creates a noise floor you can’t get below.”

Consider the mathematics of the problem. A modern high-performance processor test socket contains thousands of pins, each with its own contact resistance that varies with every insertion. That resistance isn’t binary (good or bad). It follows a distribution, with some pins behaving consistently and others exhibiting wide variation. When an AI model tries to correlate test failures with device characteristics, it is actually correlating them with a combination of device performance and a massively multidimensional space of interconnect states. The model has no way to distinguish between a marginal device and a marginal socket unless the socket’s electrical state is explicitly measured and fed into the analysis.
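The confounding can be made concrete with a deliberately simplified additive model, assumed here for illustration. Each measurement y_ij of device i on insertion j is the device's true value d_i, plus an interconnect term I·R_c,ij (a fixed, known test current times that insertion's contact resistance), plus measurement noise ε_ij. If the three terms are independent, the interconnect variance adds directly to the apparent device variance, and only a measured R_c,ij lets the analysis subtract it back out:

```latex
\begin{aligned}
y_{ij} &= d_i + I\,R_{c,ij} + \varepsilon_{ij} \\
\operatorname{Var}(y) &= \operatorname{Var}(d) + I^{2}\,\operatorname{Var}(R_c) + \sigma_{\varepsilon}^{2}
  \qquad (d,\ R_c,\ \varepsilon \text{ independent}) \\
y_{ij} - I\,R_{c,ij} &= d_i + \varepsilon_{ij}
\end{aligned}
```

Without R_c measured, a model sees only y and cannot attribute the I²·Var(R_c) share to the socket rather than the device.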

“If you don’t measure how much that interconnect varies and feed that into the model, your model’s wrong,” said Lewis. “Designers might say it’s 50 milliohms nominal, but in reality, it moves around a lot because mechanically it’s not static.”
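The same point can be checked numerically. In the hypothetical data below, the marginal devices happen to have been tested on the worst contacts, so the interconnect contribution is correlated with the device characteristic. Regressing the raw measurement on the device characteristic overstates its effect, while subtracting the measured interconnect term first recovers it. The values, units, and the helper `ols_slope` are illustrative assumptions.

```python
from typing import Sequence


def ols_slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Ordinary least-squares slope of y on x."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


# Hypothetical data (arbitrary units): the true device sensitivity is 2.0.
device = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]          # device characteristic
interconnect = [0.0, 0.0, 1.0, 1.0, 2.0, 2.0]    # measured I * R_contact per insertion
measured = [2.0 * d + c + 0.5 for d, c in zip(device, interconnect)]

raw = ols_slope(device, measured)                 # socket effect leaks into the slope
corrected = ols_slope(device, [m - c for m, c in zip(measured, interconnect)])
print(f"raw={raw:.3f} corrected={corrected:.3f}")  # → raw=2.457 corrected=2.000
```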

The implications cascade through the entire test flow. Design models assume a fixed interconnect resistance when calculating timing margins and power delivery. But if that resistance varies by 10X or more across insertions and across the pin array, the actual device performance envelope looks nothing like the simulated one. AI models trained on test data that includes this uncharacterized variability learn correlations that are partially fiction. They’re fitting to noise rather than device physics. When deployed, these models generate predictions that are statistically plausible but physically wrong.

Where AI needs people

Even where AI performs well, the need for human interpretation remains constant. The most advanced models still depend on expert supervision for context, correlation, and causality. AI can flag anomalies or identify drift, but it cannot decide whether those changes represent real yield loss, environmental fluctuation, or test noise.

“You have to have a solid signal, and it’s hard to get that signal when you’re talking about something that’s very subtle,” said Advantest’s Bullock. “If you don’t have the right data, all the intelligence in the world doesn’t help you. You’re just guessing.”

The distinction lies in what AI is designed to do. It excels at finding statistical irregularities. What it cannot do — at least not yet — is explain them. Most anomalies are ambiguous. Without embedded process knowledge, AI’s reasoning remains opaque, which limits the trust engineers place in its results.

AI also struggles with uncertainty. A model can easily overfit to its training environment, assuming patterns are causal when they are merely correlated. Engineers compensate by validating results against physics-based models, reference devices, and historical data. In doing so, they act as the interpretive layer that ensures an algorithm’s conclusions remain physically plausible.

That human oversight is not a weakness. It’s a safeguard. The more complex the process, the greater the risk of misinterpretation. In practice, human experts don’t slow the loop. They stabilize it.

As devices become denser and test regimes more integrated, the relationship between human and AI systems begins to resemble a feedback partnership. Engineers guide the model toward relevant features, tune its training sets, and verify its predictions. The AI, in turn, helps filter noise, suggest correlations, and highlight outliers. It’s a distributed intelligence system — part algorithm, part human reasoning, with each compensating for the other’s limitations.

The hybrid approach

The most effective AI implementations in production today combine machine learning with physics-based models, explicitly defined rules, and human expertise at critical decision points. That hybrid approach leverages AI’s computational strengths while compensating for its inability to reason about physical causality or handle edge cases outside the training distribution.

“When you combine simulation data with production test data through a digital twin, you can connect what’s happening in design with what you’re measuring on silicon,” said Joe Kwan, director of product management at Siemens EDA, during a presentation at Semicon West. “That closed loop helps you learn yield faster and identify root causes more efficiently.”

Physics-based models serve as guardrails that constrain AI predictions to physically plausible outcomes. When a machine learning model suggests an adjustment that would violate known material properties or thermal limits, the physics layer rejects the recommendation before it reaches a human reviewer. That constraint prevents the kinds of overconfident extrapolations that unconstrained models sometimes generate when faced with novel conditions.
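A guardrail of this kind can be as simple as checking each recommendation against first-order physics before it is surfaced. The sketch below assumes the ML layer proposes a supply voltage and an expected current, and it uses the standard steady-state approximation Tj = Ta + P·θJA to estimate junction temperature. The limit values, thermal resistance, and class names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Limits:
    max_supply_v: float
    max_junction_c: float


@dataclass(frozen=True)
class Recommendation:
    supply_v: float
    current_a: float


def junction_temp_c(ambient_c: float, power_w: float, theta_ja_c_per_w: float) -> float:
    """Steady-state estimate: Tj = Ta + P * theta_JA."""
    return ambient_c + power_w * theta_ja_c_per_w


def violations(rec: Recommendation, limits: Limits,
               ambient_c: float = 25.0, theta_ja: float = 5.0) -> List[str]:
    """Return reasons to reject a recommendation; an empty list means it may go to review."""
    reasons: List[str] = []
    if rec.supply_v > limits.max_supply_v:
        reasons.append("supply voltage above rated maximum")
    tj = junction_temp_c(ambient_c, rec.supply_v * rec.current_a, theta_ja)
    if tj > limits.max_junction_c:
        reasons.append(f"estimated junction temperature {tj:.1f} C exceeds limit")
    return reasons


limits = Limits(max_supply_v=1.0, max_junction_c=125.0)
print(violations(Recommendation(0.90, 10.0), limits))  # → []
print(violations(Recommendation(0.95, 25.0), limits))  # rejected on temperature
```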

The result is a closed-loop system that balances empirical flexibility with deterministic structure. In design verification, for example, executable digital twins allow simulated test conditions to evolve alongside physical measurements. These models help engineers predict yield and reliability before first silicon, then refine those predictions using real wafer and test data.

That connection is critical to reducing ramp time for new products and nodes. Traditional approaches rely on multiple design/manufacturing iterations to reconcile differences between pre-silicon assumptions and post-silicon behavior. Hybrid modeling compresses that feedback loop by keeping both sides synchronized.

“Machine learning helps identify correlations, but it doesn’t automatically know what’s physically meaningful,” said Kwan. “That’s why we combine learning algorithms with design and process physics. The physics gives context to the data.”

AI’s role in this hybrid system is as an adaptive layer. Instead of replacing deterministic models, it fine-tunes them, learning real-world deviations that static equations can’t capture. Physics establishes the baseline. AI adjusts it in motion.
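One minimal way to express "physics establishes the baseline, AI adjusts it" is residual learning: keep the physics model's prediction and train a correction only on the gap between prediction and measurement. In the sketch below a one-feature linear fit stands in for the learning component, and the temperature feature, data, and function names are hypothetical.

```python
from typing import Sequence, Tuple


def fit_residual(feature: Sequence[float], physics_pred: Sequence[float],
                 measured: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of (measured - physics_pred) approx intercept + slope * feature."""
    resid = [m - p for m, p in zip(measured, physics_pred)]
    mx = sum(feature) / len(feature)
    mr = sum(resid) / len(resid)
    slope = (sum((x - mx) * (r - mr) for x, r in zip(feature, resid))
             / sum((x - mx) ** 2 for x in feature))
    return mr - slope * mx, slope


def hybrid_predict(physics_value: float, feature_value: float,
                   intercept: float, slope: float) -> float:
    """Physics baseline plus the learned correction."""
    return physics_value + intercept + slope * feature_value


# Hypothetical path delays (ns) predicted by physics vs. measured on silicon at die temperature (C).
temps = [25.0, 50.0, 75.0, 100.0]
physics = [1.00, 1.10, 1.20, 1.30]
silicon = [p + 0.1 + 0.002 * t for p, t in zip(physics, temps)]
b0, b1 = fit_residual(temps, physics, silicon)
print(round(hybrid_predict(1.40, 125.0, b0, b1), 3))  # → 1.75
```

The physics model is never retrained here; if the learned correction grows large, that itself is a signal worth an engineer's attention.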

Hybridization also helps mitigate one of AI’s biggest weaknesses — data scarcity. Production test environments rarely have labeled data. True root-cause identification depends on detailed failure analysis, which is costly and time-consuming. Hybrid systems overcome this by training AI components within a physics-informed boundary, reducing dependence on exhaustive datasets.

“AI is increasingly used to close the gap between what is predicted before tape-out and what is observed on real silicon,” said Synopsys’ Castro. “By applying techniques like surrogate modeling and digital twins, engineers can continuously calibrate pre-silicon models with wafer and test data to improve accuracy in timing, power, and reliability estimates, helping reduce costly design iterations and speeding up yield ramp.”

The hybrid model is emerging as the framework for trustworthy AI in test analytics. It retains traceability and supports continuous learning without losing control of causality. For engineers, that’s the difference between a model that helps them understand and one that merely automates a task.

“If you’re in a production environment where everything needs to work, you probably don’t want the system making decisions on its own,” said PDF’s Jacobs. “You want the human in the loop until everyone’s comfortable with the AI’s recommendations.”

Making it work in production

The practical challenges of implementing AI in test analytics go far beyond training algorithms. They involve governance, data stewardship, and process integration. Many fabs already generate more data than their AI systems can handle. Data is siloed across tools, suppliers, and test stages, each with its own schema and access rules.

“One major hurdle is distribution drift,” said Castro. “Models trained on simulated data, such as RTL or SPICE-level predictions, often fail to generalize when exposed to real silicon measurements. Physical effects, process variations, and environmental factors that simulations don’t fully capture lead to discrepancies. Calibration loops can help, but the complexity of bridging these domains remains high.”

This mismatch between controlled simulation and production reality forces fabs to establish calibration loops: AI models that monitor AI models. Without this oversight, the system quickly loses alignment.

The issue is compounded by versioning. Design revisions, test program updates, and process changes all modify the meaning of data over time. Without standardized governance, models trained on earlier revisions can produce misleading results. Even small parameter shifts can ripple through predictive systems, invalidating months of work.

“Aligning the two worlds of design and test is far from trivial,” added Castro. “Design and test data often use different schemas and semantics, making traceability and feature engineering challenging tasks. Limited observability from sparse on-chip monitors adds another layer of difficulty, as models must infer hidden states with incomplete telemetry.”

AI’s integration into production test is therefore not just a computational problem; it’s a cultural and organizational one. The success of AI depends on cross-domain collaboration, with design, test, manufacturing, and suppliers all speaking a common data language.

“The semiconductor industry is essentially becoming a software industry,” said Mukesh Khare, general manager of IBM Semiconductors and vice president of hybrid cloud, during a panel at Semicon West. “We need to collaborate more openly, bringing every aspect, from materials to equipment to chip design, together at high speed. That means being willing to share data and build end-to-end AI models that connect process conditions to device performance through design-manufacturing co-optimization.”

This movement toward ecosystem-level collaboration is gaining momentum, but it also demands trust. Data sharing across company boundaries raises concerns about IP protection and competitive advantage. The balance between openness and confidentiality is delicate, and AI amplifies those tensions by requiring large, integrated datasets to reach its full potential.

The technical foundation for that trust lies in model interpretability and physical validation. Fabs increasingly demand that AI-generated insights be traceable to measurable process parameters. A model that produces accurate predictions without explainability may improve short-term metrics but undermine long-term reliability.

“The future will likely favor a hybrid approach,” said Castro. “Physics-based models will remain the backbone for deterministic behaviors like timing and electromigration because they are grounded in well-understood principles. These models provide a reliable baseline, while machine learning adds flexibility by adapting predictions based on real-world telemetry and test data.”

The complexity of modern test data ensures that AI will continue to play an expanding role. But for now, its power depends as much on infrastructure, governance, and collaboration as on the algorithms themselves.

Managing drift and maintenance

Deploying AI in production test environments is not a one-time integration. It’s an ongoing engineering commitment. Models trained on historical data must be continuously monitored to ensure they remain aligned with evolving processes, materials, and hardware. Drift is not an occasional problem. It is a certainty.

“You have to monitor model drift very closely and have a clear plan for when it happens,” said Jacobs. “That means watching performance metrics, retraining with new data, and validating that predictions still make sense before acting on them.”

AI’s predictive power depends on the stability of the system it monitors. As process recipes evolve and test conditions change, the data distribution that trained the model no longer represents the environment in which it operates. If drift is not detected and corrected, the model’s accuracy deteriorates silently until its output becomes actively misleading.
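Silent distribution shift can be caught by comparing the data a model sees in production with the data it was trained on. The population stability index (PSI) is one widely used statistic for this. The sketch below bins both samples on fixed edges and sums (a - e) * ln(a / e) over the bins, where a and e are the actual and expected bin proportions. The bin edges, the floor for empty bins, and the often-quoted rule of thumb that PSI above about 0.25 signals a significant shift are conventions, not requirements from the sources quoted here.

```python
import bisect
import math
from typing import List, Sequence


def _proportions(values: Sequence[float], edges: Sequence[float], eps: float) -> List[float]:
    """Fraction of values in each bin defined by sorted edges (floored at eps)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index of `actual` relative to `expected`."""
    e = _proportions(expected, edges, eps)
    a = _proportions(actual, edges, eps)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


training = [0.1 * i for i in range(100)]   # hypothetical training-time feature values
production = [v + 3.0 for v in training]   # same feature after a process change
edges = [2.0, 4.0, 6.0, 8.0]
print(psi(training, training, edges))           # → 0.0
print(psi(training, production, edges) > 0.25)  # → True
```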

The cost of this degradation is difficult to measure but easy to underestimate. Subtle errors in adaptive test limits or predictive maintenance recommendations can propagate downstream, skewing yield analyses and hiding root causes. Effective model governance requires not just monitoring tools, but also clear policies, defined retraining intervals, documented validation procedures, and transparent change management.

Continuous monitoring also requires infrastructure that can track the lineage of data and models over time. Each training cycle must record which data were used, under what conditions, and which version of the process they represent. That traceability enables engineers to reproduce results, compare performance across revisions, and identify precisely when and why a model began to diverge.
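A lineage record can be as small as a content hash of the training rows plus the revisions they represent. The field names below are hypothetical. The point of the sketch is that any change to the data, even a single value, yields a different fingerprint, so a stored record shows exactly which dataset and process version a model was built from.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Any, Dict, Sequence


@dataclass(frozen=True)
class LineageRecord:
    model_version: str
    process_revision: str
    test_program_revision: str
    data_sha256: str
    n_rows: int


def fingerprint(rows: Sequence[Dict[str, Any]]) -> str:
    """SHA-256 over rows serialized in order with sorted keys (order-sensitive by design)."""
    h = hashlib.sha256()
    for row in rows:
        h.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        h.update(b"\n")
    return h.hexdigest()


def make_record(rows: Sequence[Dict[str, Any]], model_version: str,
                process_revision: str, test_program_revision: str) -> LineageRecord:
    return LineageRecord(model_version, process_revision, test_program_revision,
                         fingerprint(rows), len(rows))


rows = [{"die": "W1-0101", "idd_ma": 41.2}, {"die": "W1-0102", "idd_ma": 40.8}]
rec = make_record(rows, "m-1.3", "proc-B2", "tp-7.1")
print(json.dumps(asdict(rec), indent=2))  # store alongside the model artifact
```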

At advanced fabs, AI management now mirrors traditional statistical process control (SPC) for physical tools. Just as sensors are calibrated and verified at regular intervals, AI systems are validated against reference datasets to confirm that they still represent reality. The model becomes another piece of production equipment subject to maintenance schedules, certification audits, and operator oversight.
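Treating the model as production equipment suggests a periodic acceptance check: score it on a fixed reference dataset and compare the error with the value recorded when the model was certified. The tolerance, the mean-absolute-error metric, and the function name below are assumptions for illustration.

```python
from typing import Callable, Sequence, Tuple


def validation_gate(predict: Callable[[float], float],
                    ref_inputs: Sequence[float], ref_outputs: Sequence[float],
                    certified_mae: float, tolerance: float = 0.2) -> Tuple[bool, float]:
    """Pass if the reference-set error is within (1 + tolerance) x the certified error."""
    errors = [abs(predict(x) - y) for x, y in zip(ref_inputs, ref_outputs)]
    mae = sum(errors) / len(errors)
    return mae <= certified_mae * (1 + tolerance), mae


def model(x: float) -> float:
    """Hypothetical deployed model."""
    return 2.0 * x


golden_in = [1.0, 2.0, 3.0]
print(validation_gate(model, golden_in, [2.0, 4.1, 6.0], certified_mae=0.05))  # passes
print(validation_gate(model, golden_in, [2.5, 4.5, 6.5], certified_mae=0.05))  # fails
```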

Building cross-functional competence

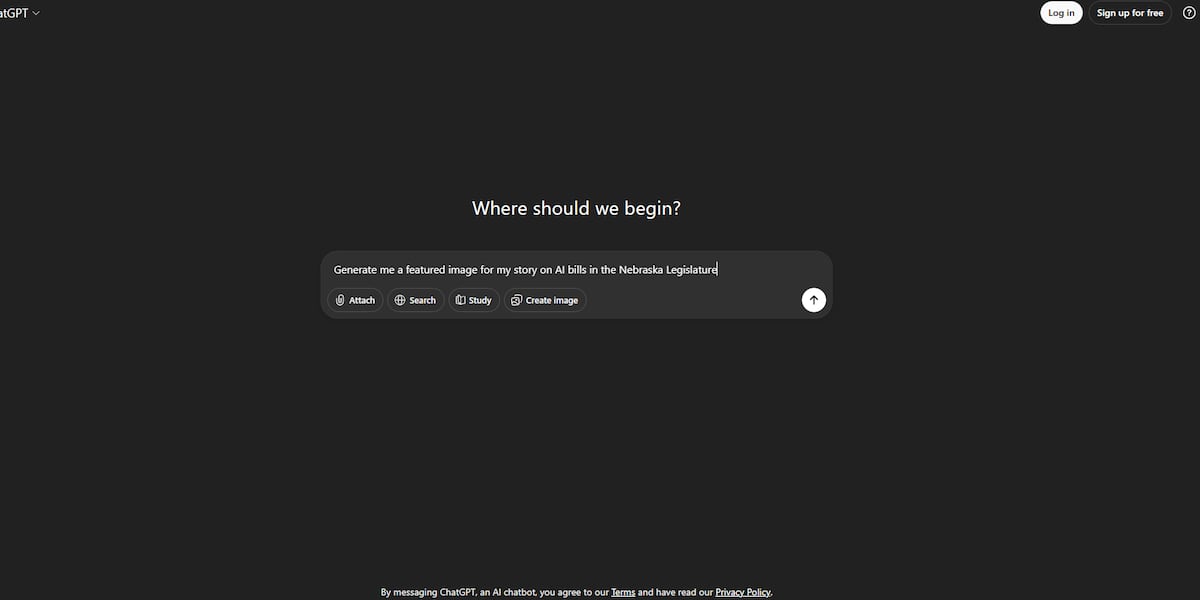

The more sophisticated AI becomes, the more it exposes the gaps between disciplines. Data scientists design models that optimize accuracy, while test engineers need solutions that optimize yield. Their respective priorities align only when both groups understand each other’s language.

Many companies learn this lesson after deployment. The first generation of AI projects in test analytics often relied on external consultants or isolated data science teams. Those efforts produced technically elegant models but struggled in production because they lacked context about how test data was generated, which anomalies matter, and how limits were applied.

Successful teams are now building hybrid roles — engineers who understand data, and data scientists who understand test. The shared goal is not just model performance, but model relevance. That requires a workflow in which algorithmic insights loop back into engineering decisions, and human observations refine model parameters in turn.

“Understanding the data is very important,” said Teradyne’s Yu. “Engineers want to build a model with 100% accuracy, but it will never happen if they don’t have the domain knowledge and don’t understand the data.”

This collaboration is becoming the foundation for sustained AI maturity. As tools evolve, fabs are standardizing their pipelines for data ingestion, labeling, validation, and retraining. Each step adds transparency and control, transforming what was once an experimental practice into an engineering discipline.

Conclusion

The narrative around AI in test analytics has shifted from revolution to refinement. The early hype has given way to a more grounded understanding of what the technology can and cannot do. AI does not replace engineers. It scales them. It automates the repetitive, data-heavy layers of analysis so humans can focus on decisions that require judgment, context, and creativity.

The organizations enjoying the biggest benefits share several traits. They start with clean, well-characterized data; they integrate physics-based constraints into their models; they align data science with domain expertise; and they treat AI systems as continuously maintained assets rather than static tools. Each of these elements reinforces the others. Neglect one, and the system fails.

When implemented correctly, AI delivers measurable value through faster yield learning, reduced test time, improved equipment uptime, and earlier detection of process anomalies. But those benefits depend on structure, not serendipity. The difference between AI that works and AI that fails is less about algorithmic sophistication than operational discipline.