Visual Intelligence has proven to be one of the best additions to Apple Intelligence, turning your iPhone's camera into a search tool. And in iOS 26, the feature gains new capabilities that promise to make Visual Intelligence even more useful.

It's tempting to dismiss Visual Intelligence as Apple's answer to Google Lens, but that underestimates what this AI-powered feature brings to the table. In its current form, you can use the Camera Control button on iPhone 16 models or a Control Center shortcut on the iPhone 15 Pro to launch the camera and snap a photo of whatever has caught your interest. From there, you can run an online image search, get more information via the ChatGPT integration built into Apple Intelligence, or even create a calendar entry when you photograph something that lists dates and times.

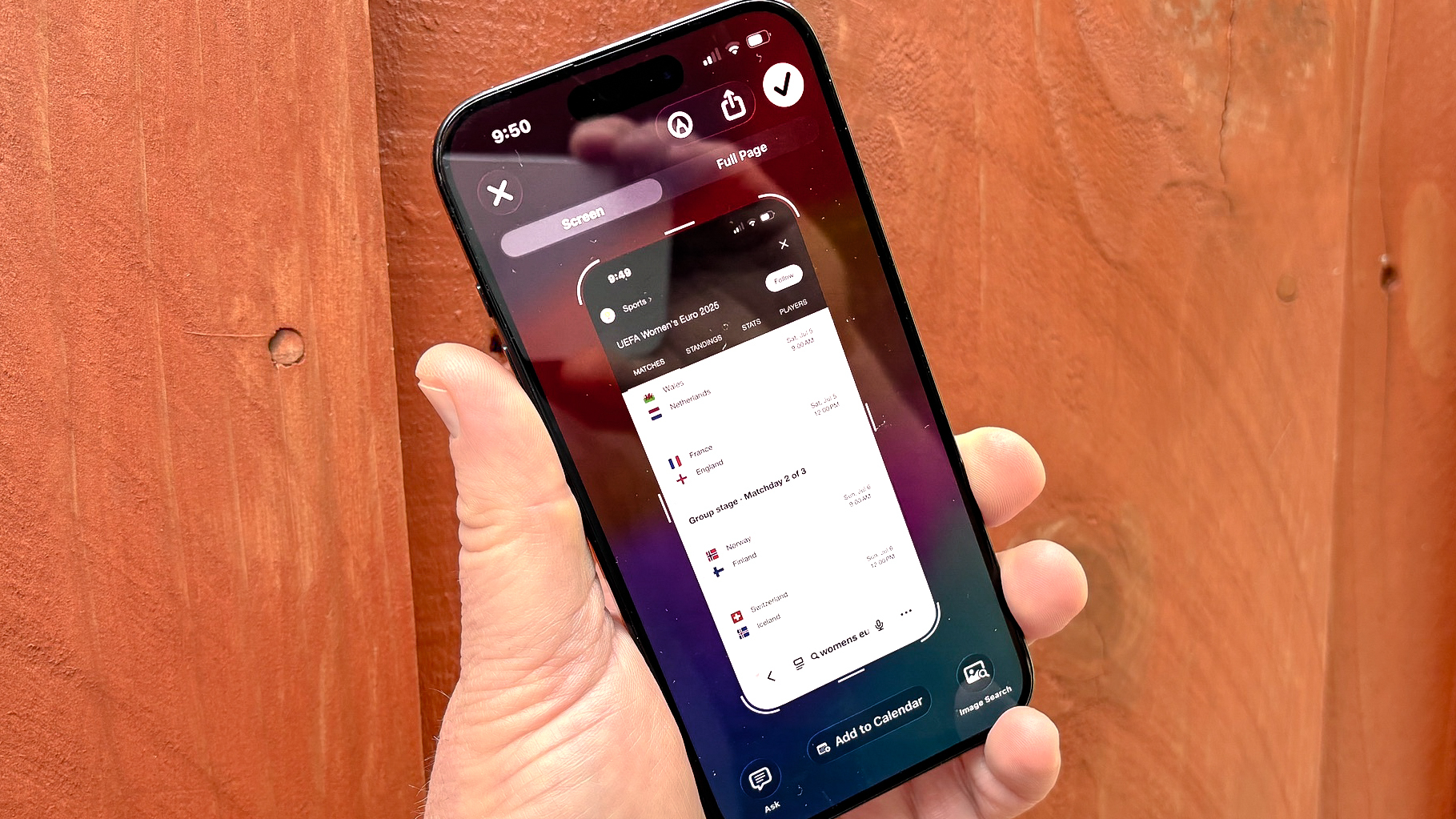

iOS 26 extends these capabilities to what's on your screen. All you have to do is take a screenshot, and the same Visual Intelligence commands you use with your iPhone's camera appear alongside it, making it simple to run searches or create calendar entries.

The same restrictions that apply to the current version of Visual Intelligence apply to the updated iOS 26 version: you'll need an iPhone that supports Apple Intelligence to use the tool. But if you have a compatible phone, you'll get new search capabilities that are just a screenshot away.

Here's what you'll see when you try the updated Visual Intelligence, whether you've downloaded the iOS 26 developer beta or are waiting for the public beta to arrive this month to test the latest iPhone software.

What’s new in Visual Intelligence in iOS 26

You can still use your iPhone's camera to search for things with Visual Intelligence in iOS 26. But the software update extends these features to images and information on your screen, captured via screenshots.

Simply take a screenshot of whatever catches your interest on your iPhone screen (as you probably know, this means pressing the power button and the volume up button simultaneously), and the screenshot will appear as usual. You can save it as a regular screenshot by tapping the checkmark button in the upper right corner and saving the image to Photos, Files or a Quick Note. Besides the checkmark, there are tools to share the screenshot and mark it up.

But you'll also notice other commands at the bottom of the screen. These are the new Visual Intelligence features. From left to right, your options are Ask, Add to Calendar and Image Search.

Ask taps into ChatGPT's knowledge base to bring up more information on whatever is on your screen. There's also a search field where you can enter a more specific question. For example, I took a screenshot of the ESPN home page, which featured a photo from the hot dog eating contest held on July 4, and used the Ask button to find out who had won the contest the most times.

Add to Calendar takes the time and date information on your iPhone screen and automatically generates an entry for the iOS Calendar app, which you can edit before saving. (A good thing, too, because Visual Intelligence doesn't always get things right.)

Image Search is pretty straightforward. Tap this command and the AI will launch a Google search for the image in your screenshot. In my case, that happened to be an old Tapper arcade game cabinet, in case I ever have more money and nostalgia than sense.

For the most part, the Visual Intelligence searches I described above used the entire screen, but you can highlight the specific thing you want to search for with your finger, a bit like the Circle to Search feature now widespread on Android devices. I highlighted a headline in a Spanish newspaper and was able to get an English translation. Yes, Visual Intelligence can also translate text in screenshots, just as you can use your camera as a translation tool.

iOS 26 Visual Intelligence impressions

It's important to remember that the new Visual Intelligence tools are in beta, just like the rest of iOS 26, so you may run into hiccups when using the feature.

For example, the first time I tried to create a calendar entry for the Women's Euro championship, Visual Intelligence tried to create an entry for the current day instead of the day the match was actually taking place.

When this happens, make liberal use of the thumbs up/thumbs down icons Apple uses to train its AI tools. I tapped the thumbs down, selected the option indicating the date was wrong from the list on the feedback screen and sent it to Apple. I don't know whether my feedback had an immediate effect, but the next time I tried to create a calendar event, the date was generated correctly.

You can capture screenshots of your Visual Intelligence results, but how to save those screens isn't immediately intuitive. After taking your screenshot, swipe left to see the new shot, then tap the checkmark in the upper corner to save everything. It's something I'm sure I'll get used to over time, but it feels a bit clunky after years of taking screenshots that save automatically to the Photos app.

It's an adjustment worth making, though. As useful as Visual Intelligence's features have been, remembering to use your camera to access them isn't always the most natural thing to do. Taking a screenshot is more immediate, literally putting Visual Intelligence's capabilities at your fingertips.