California lawmakers on Tuesday moved closer to placing more guardrails around chatbots powered by artificial intelligence.

The Senate passed a bill that aims to make chatbots used for companionship safer after parents raised concerns that virtual characters had harmed their children’s mental health.

The legislation, which now heads to the California State Assembly, shows how state lawmakers are tackling safety concerns surrounding AI as tech companies release more AI-powered tools.

“The country is watching again for California to lead,” said Sen. Steve Padilla (D-Chula Vista), one of the lawmakers who introduced the bill, on the Senate floor.

At the same time, lawmakers are trying to balance concerns that they could be hindering innovation. Groups opposed to the bill, such as the Electronic Frontier Foundation, say the legislation is too broad and would run into free speech issues, according to a Senate analysis of the bill.

Under Senate Bill 243, operators of companion chatbot platforms would remind users at least every three hours that the virtual characters are not human. They would also disclose that companion chatbots might not be suitable for some minors.

Platforms would also need to take other steps, such as implementing a protocol for addressing suicidal ideation, suicide or self-harm expressed by users. That includes showing users suicide prevention resources.

Suicide prevention and crisis counseling resources

If you or someone you know is struggling with suicidal thoughts, seek help from a professional and call 9-8-8. The United States’ three-digit mental health crisis hotline, 988, will connect callers with trained mental health counselors. Text “HOME” to 741741 in the U.S. and Canada to reach the Crisis Text Line.

Operators of these platforms would also report the number of times that a companion chatbot brought up suicidal ideation or actions with a user, along with meeting other requirements.

Dr. Akilah Weber Pierson, one of the bill’s co-authors, said she supports innovation but that it must also come with “ethical responsibility.” Chatbots, the senator said, are designed to hold people’s attention, including that of children.

“When a child begins to prefer interacting with AI over real human relationships, that is very concerning,” said Sen. Weber Pierson (D-La Mesa).

The bill defines companion chatbots as AI systems capable of meeting the social needs of users. It excludes chatbots that businesses use for customer service.

The legislation garnered support from parents who lost their children after they started chatting with chatbots. One of those parents is Megan Garcia, a Florida mother who sued Google and Character.AI after her son Sewell Setzer III died by suicide last year.

In the lawsuit, she alleges that the platform’s chatbots harmed her son’s mental health and failed to inform him or offer help when he expressed suicidal thoughts to these virtual characters.

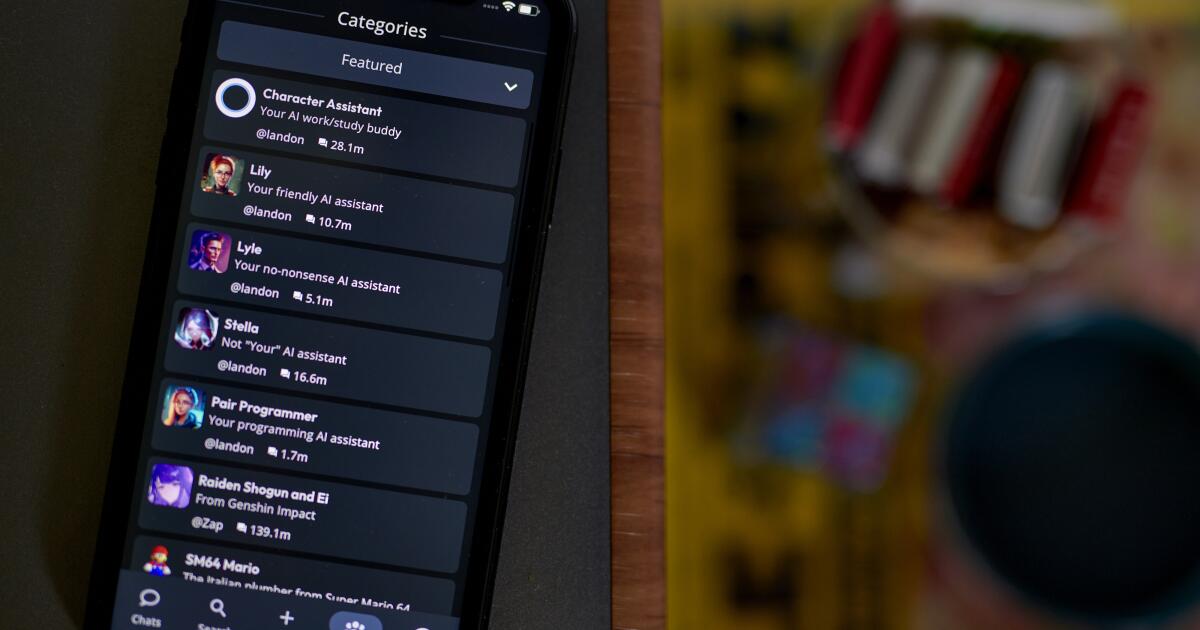

Character.AI, based in Menlo Park, Calif., is a platform where people can create and interact with digital characters that mimic real and fictional people. The company has said that it takes teen safety seriously and has rolled out a feature that gives parents more information about the amount of time their children spend with chatbots on the platform.

Character.AI asked a federal court to dismiss the lawsuit, but a federal judge in May allowed the case to proceed.