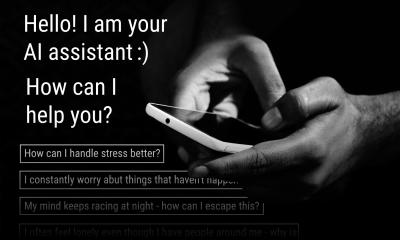

The research team stresses that the EU AI Act’s transparency requirement, which only obliges providers to inform users that they are interacting with AI, is not enough to protect vulnerable groups. They call for enforceable safety and monitoring standards, supported by voluntary guidelines that help developers implement safe design practices.

As a solution, they propose pairing future AI applications that have persistent chat memory with a so-called “guardian angel” or “good Samaritan AI”: an independent, supportive AI instance that protects the user and intervenes if necessary. Such an AI agent could detect potential risks at an early stage and take preventive measures, for example by alerting users to support resources or issuing warnings about dangerous conversation patterns.

Recommendations for safe interaction with AI

In addition to implementing such safeguards, the researchers recommend rigorous age verification, age-specific protections, and mandatory risk assessments before market entry. “As clinicians, we see how language shapes human experience and mental health,” explains Falk Gerrik Verhees, psychiatrist at the University Hospital Carl Gustav Carus Dresden. “AI characters use the same language to simulate trust and connection, which makes regulation essential. We need to ensure that these technologies are safe and protect users’ mental well-being rather than putting it at risk,” he adds.

The researchers say clear, enforceable standards are needed for mental health use cases. They recommend that LLMs clearly state that they are not approved medical tools for mental health. Chatbots should refrain from posing as therapists and limit themselves to basic, non-medical information. They must be able to recognize when professional help is needed and guide users to appropriate resources. Compliance with these criteria, and their effectiveness, could be ensured through simple open-access tools that continuously test chatbots for safety.

“The safeguards we propose are essential to ensure that general-purpose AI can be used safely and in useful and beneficial ways,” concludes Max Ostermann, researcher in Professor Gilbert’s Medical Device Regulatory Science team and first author of the publication in npj Digital Medicine.

Important note:

If you are experiencing a personal crisis, please seek help from a local crisis service, contact your GP or a psychiatrist/psychotherapist or, in an emergency, go to the hospital. In Germany, you can call 116 123 (in German) or search for online services in your language at www.telefonseelsorge.de/internationale-hilfe.

Source: Technical University of Dresden