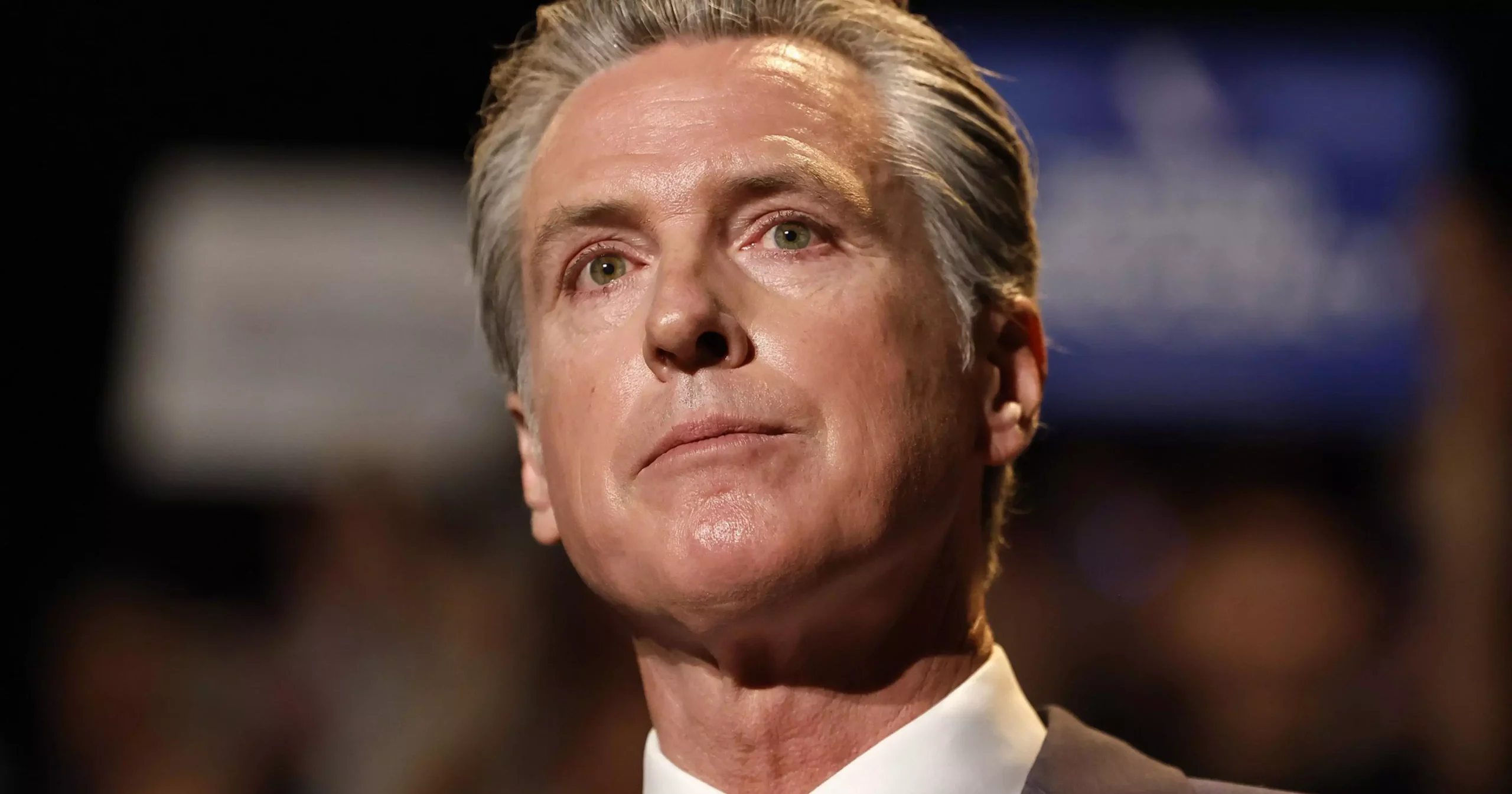

Gov. Gavin Newsom signed the nation’s first comprehensive law on artificial intelligence on Monday, putting California in the driver’s seat on regulating a rapidly growing industry that the federal government has not addressed.

“California has proven that we can establish regulations to protect our communities while ensuring that the growing AI industry continues to prosper,” Newsom said in a statement. “This legislation strikes that balance.”

Senate Bill 53, written by state Sen. Scott Wiener (D-San Francisco), establishes the Transparency in Frontier Artificial Intelligence Act, the most ambitious effort to date to regulate advanced AI systems. The law will be rolled out in phases, starting in January.

Newsom’s signing of SB 53 comes after he vetoed a more aggressive Wiener bill last year that would have imposed harsher penalties on bad actors in AI. That bill was opposed by many of Silicon Valley’s most powerful technology companies. In his veto message, Newsom created a working group of AI experts to design a framework, which was used to write SB 53 and other AI-related bills this year. The group’s recommendations focused more on transparency and risk mitigation than on penalizing companies.

Many of the biggest players in tech, including Meta, Alphabet, OpenAI and the trade group TechNet, lobbied against SB 53, saying they prefer uniform rules at the federal level.

Federal lawmakers have not addressed the issue, but President Donald Trump released an “AI Action Plan” this summer that called for a moratorium on state AI regulation, which many considered a gift to technology companies.

Supporters of SB 53 said that in the absence of federal leadership on AI, California had a responsibility to act.

“With a technology as transformative as AI, we have a responsibility to support that innovation while putting in place commonsense guardrails to understand and reduce risks,” Wiener said in a statement. “With this law, California is stepping up, once again, as a world leader in technological innovation and safety.”

Although some technology companies remained opposed this year, Anthropic, an AI safety and research company based in San Francisco, and technology safety advocates supported SB 53.

“This is a key victory for the growing movement in California and across the country to hold Big Tech CEOs accountable for their products, apply basic guardrails to the development and deployment of AI, and protect the ability of whistleblowers to come forward when something is wrong,” said Sacha Haworth, executive director of Tech Oversight California.

Companies headquartered outside California may not be as deeply affected, but supporters of the bill said it would have a national and global impact.

In a May report, the governor’s office noted that 32 of the world’s top 50 AI companies call California home. The new law not only creates a framework for national legislation but also preempts city and county agencies in California from enacting conflicting rules.

The new law targets “frontier models,” which are trained with enormous computing power and which Wiener and other SB 53 supporters say pose risks such as enabling cyberattacks, creating dangerous weapons or operating beyond human control. The law also applies to “large frontier developers,” defined as AI companies with more than $500 million in annual revenue.

Under the law, large frontier developers will have to create and follow a robust AI safety framework that incorporates national and international practices. This framework must be published online and updated annually. Before releasing or substantially modifying a frontier model, companies will have to publish transparency reports describing the model’s capabilities, intended uses, limitations and risk assessment results.

The bill assigns a major oversight role to the Office of Emergency Services (OES). Developers must regularly submit summaries of catastrophic risk assessments to the state agency and report any critical and urgent safety incidents. Starting in 2027, OES will publish anonymized summaries of safety incidents.

Noncompliance, including failing to report or making false statements, could trigger civil penalties of up to $1 million per violation.

The law also includes whistleblower protections that shield employees from retaliation if they disclose safety problems or violations to state or federal authorities. Large developers must provide internal systems for employees to report concerns anonymously and must provide updates on how those concerns are handled.

Beyond the oversight of private companies, the bill also creates a consortium to design CalCompute, a state-backed public cloud platform that would expand access to high-powered computing resources for universities, researchers and public-interest projects. The University of California system will have priority in managing the consortium, which will be responsible for presenting a framework by 2027.

In addition to putting California in the driver’s seat on AI regulation, the new law gives Newsom, a likely presidential candidate in 2028, a key talking point on the campaign trail.