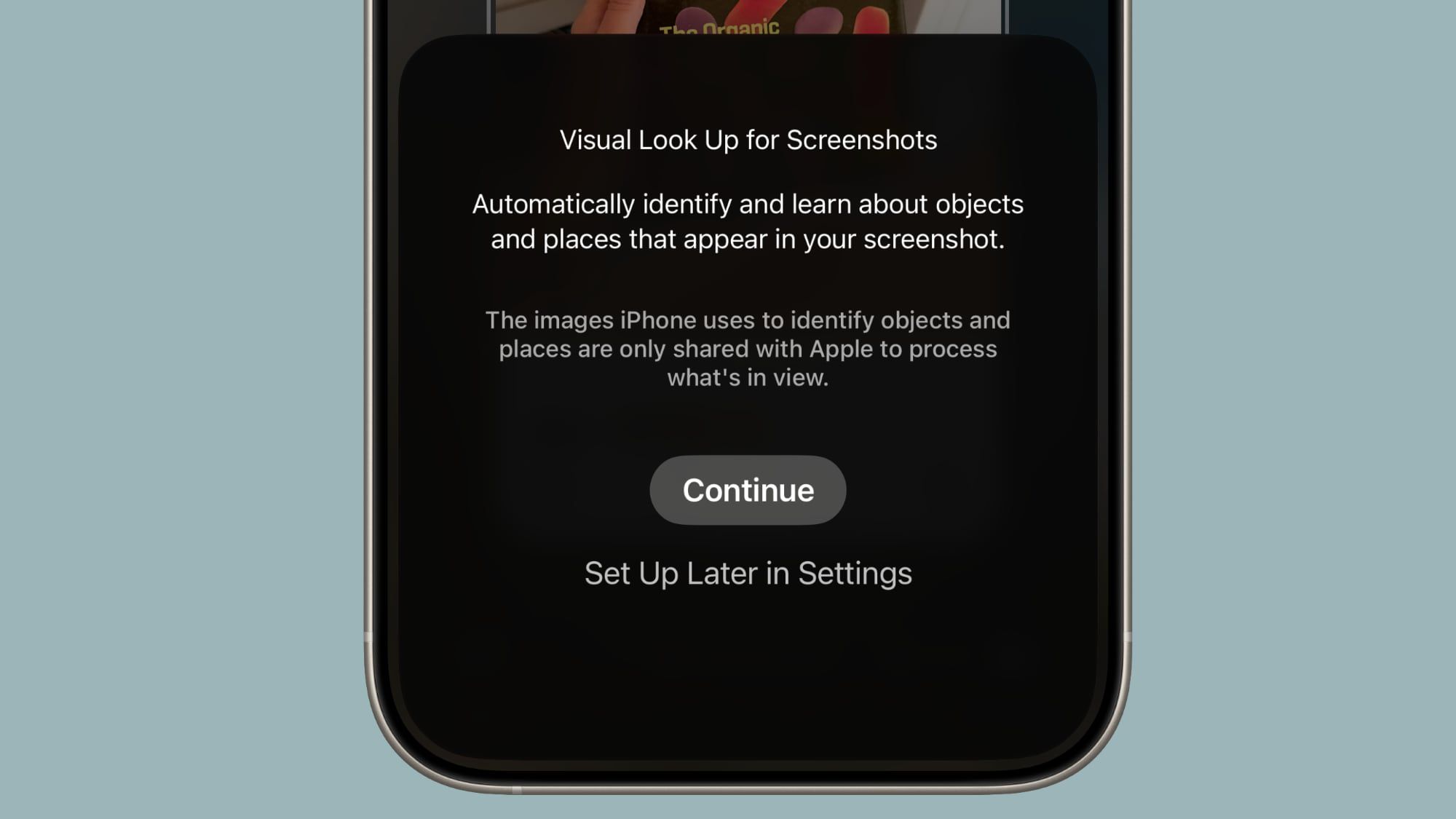

Visual Intelligence, the Apple Intelligence feature that Apple introduced last year, gains new capabilities in iOS 26 that make it more useful and better able to compete with features available on some Android smartphones.

Onscreen awareness

In iOS 18, Visual Intelligence only works with the camera, but in iOS 26 it also works with what is on your screen. You can capture a screenshot of whatever you are viewing, then use Visual Intelligence on it to identify what you are looking at, search for images, and get more information via ChatGPT.

How to use Visual Intelligence on screen

Visual Intelligence for screenshots works much like Visual Intelligence in the Camera app, but it lives in the screenshot interface. Take a screenshot (press the volume up button and the side button together), then tap into the screenshot interface if it is displayed.

To exit Markup (which is the default view), tap the small pen icon at the top of the screen. You should then see the Visual Intelligence options.

Highlight to search

With Highlight to Search for onscreen content, you can use a finger to draw over the object in the screenshot that you want to search for. It is similar to Android's Circle to Search feature.

Highlight to Search lets you run an image search for a specific object in a screenshot, even when the image contains several things. It uses Google Image Search by default, but during its keynote Apple showed the feature working with other apps such as Etsy. Apps will probably have to add support for the feature.

In some cases, Visual Intelligence identifies individual objects in an image on its own, and you can tap them to search without needing to highlight anything. This is similar to the object identification feature in the Photos app, but it leads to an image search.

Ask and search

If you don't need to isolate an object in your screenshot, you can simply tap the Ask button to ask questions about what you see. The questions are relayed to ChatGPT, which provides the answers. The Search button asks Google Search for more information.

As with standard Visual Intelligence, if your screenshot includes dates, times, and related details for an event, the event can be added directly to your calendar.

New object identification

Apple did not mention it, but Visual Intelligence adds support for quickly identifying new types of objects. It can now identify art, books, landmarks, natural landmarks, and sculptures, in addition to the animals and plants it could already provide information about.

If you use Visual Intelligence on an object it can recognize, a small glowing icon appears. Tapping it reveals information about what is in view. What's handy about this aspect of Visual Intelligence is that it works with either the live camera view or a photo you have taken.

For standard Ask and Search requests, Visual Intelligence requires you to take a photo so that it can be relayed to sources such as ChatGPT or Google Image Search. Art, books, landmarks, natural landmarks, sculptures, plants, and animals, by contrast, can be identified on device without contacting another service.

Compatibility

Visual Intelligence is limited to devices that support Apple Intelligence, which include the iPhone 15 Pro models and the iPhone 16 models. It is activated by long-pressing the Camera Control button on devices that have Camera Control, or by using the Action button or a Control Center control.

Launch date

iOS 26 is currently in beta, and it will be released to the public in September.