Apple finally catches up to Android with a feature released on the Pixel 6 line in 2021

The Hold Assist feature has your iPhone monitor the call when you are put on hold and alerts you when the other party returns to the line. | Image Credit-PhoneArena

It is an excellent feature, and one that I missed a lot when I returned to the iPhone. Today, during the WWDC keynote, Apple announced its version of Hold for Me, which it calls Hold Assist. Let's say you need to call an airline to ask a question about a reservation you made. When you are put on hold, you can have your iPhone sit through the silence while you get back to work. When an agent is ready to help you, you will be notified so that you can turn your attention back to the call.

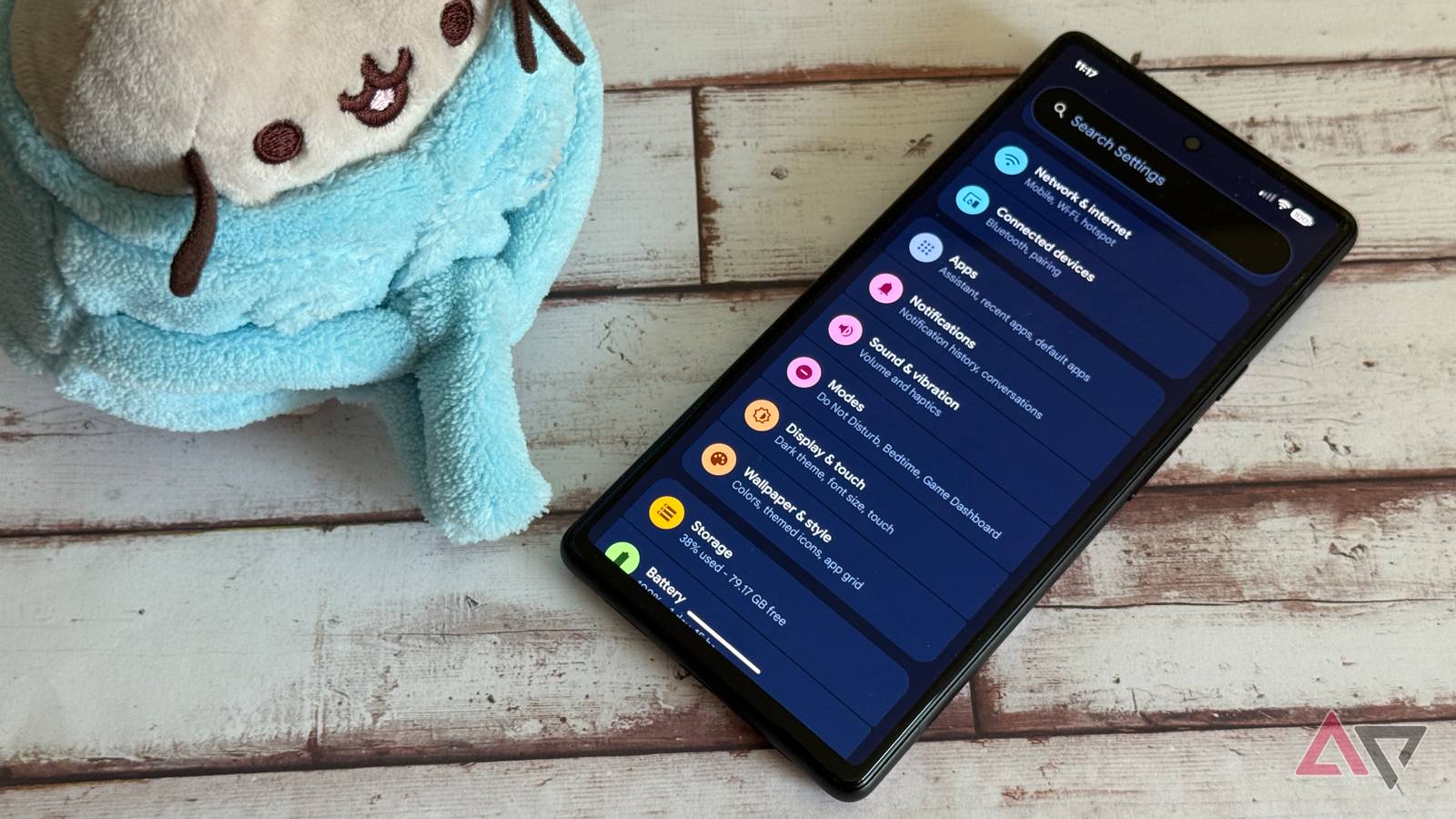

During a call, tap the button with the three-dot icon on the in-call screen. Tapping it brings up several options, the last of which is Hold Assist. Tap Hold Assist and the Dynamic Island will show that the feature is active, that the call is on hold, and that you will be notified when a live person is on the line.

Live Translation lets you have real-time conversations with someone who does not speak your language. | Image Credit-Apple

Apple's new Live Translation feature is similar to Samsung's Live Translate. | Image Credit-PhoneArena

Keeping the technology on-device allows spoken or written conversations to stay private. Your replies are translated in real time into the other party's language, allowing a seamless two-way conversation.

Finally, a feature on Pixel models called Google Call Screen uses AI to ask a caller for their name and the reason for the call before connecting it. Apple now has a similar feature that it calls Call Screening. The idea is to gather enough information from the caller for the AI to decide whether to block the call or let it through.

Call Screening asks callers questions to determine whether their call should go through. | Image

As with Hold Assist, iPhone users will find Live Translation and Call Screening to be extremely useful features, and I am happy to see them arrive on iOS. As for this myth, how can anyone not see it for what it is? This shows you the difference between Google and Apple when it comes to their operating systems. Google changes Android to improve the user experience. Apple waits before adding these useful features, then gives them a somewhat similar name. That said, I am happy to see Apple add these features to iOS, and I can't wait to use them.